What if AI succeeds but OpenAI fails?

A few thoughts on the AI race.

I actually asked three AI programs to draw me a picture of "Gemini, GPT, Claude, Grok, and Qwen in a race". The one above, drawn by Gemini, was in my opinion the best one (and, amusingly, has itself winning the race). Here was the one drawn by GPT-5.2:

This looks OK, but gets the mascots wrong, doesn’t have many labels or logos, and gets Grok’s logo wrong. Here’s what Grok made me:

Perhaps the less said about this image, the better.

Anyway, I don’t often write about corporate horse races or evaluate corporate strategies — if that’s your thing, I recommend Ben Thompson’s blog, Stratechery. But once in a while it gets interesting. The AI race is one of those times. So take this post as the thoughts of an interested amateur/outsider.

(Financial disclosure: I have no financial interest in any of the companies discussed here, though honestly maybe that’s a bad move on my part.)

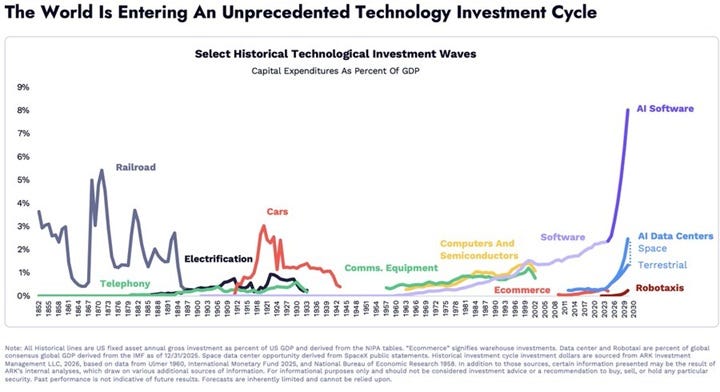

The amount of capital expenditure being poured into the AI race is extraordinary. Depending on how you measure it, this may already be one of the biggest capex booms in history, and many analysts are forecasting it to be the biggest by the end of the decade:

I’ve written a lot about how this boom might conceivably turn into a bust. Even so, AI tech works, it’s going to be incredibly useful, and a lot of people are going to make enormous amounts of money from it. Any bust would be a speed bump on the road to success. But one scenario I haven’t talked about much is that the AI industry as a whole succeeds wildly while one or more of its flagship companies fail.

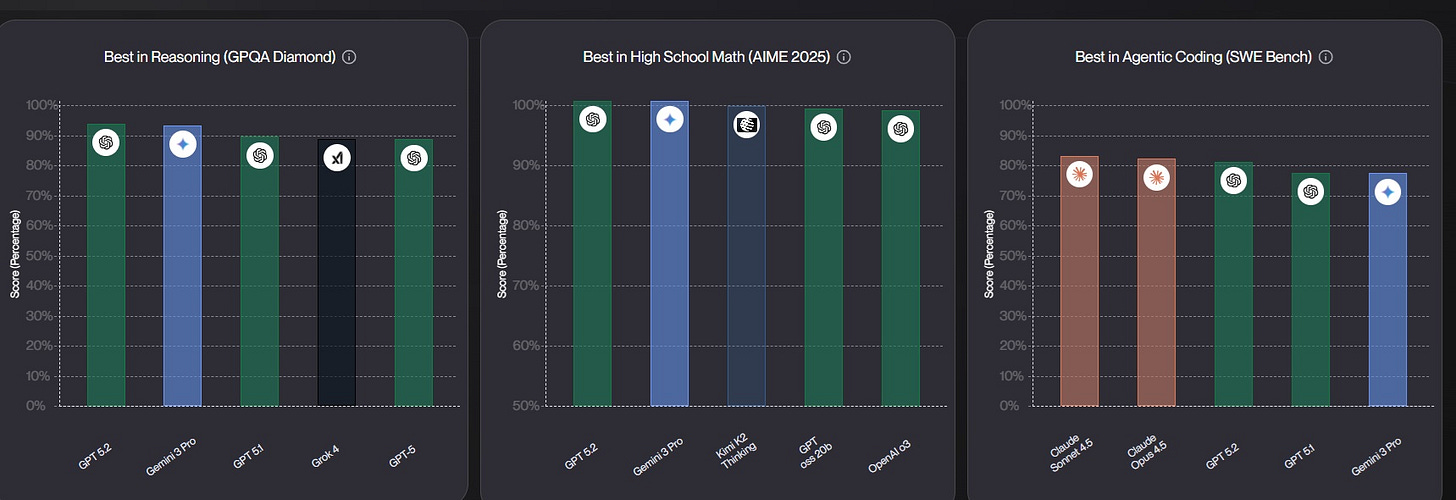

To some, this might sound like a bold or even foolish thing to talk about. After all, OpenAI’s name is almost synonymous with generative AI. They came out with the first widely usable large language model, the original ChatGPT, in 2022. And ever since then, their models have been at or near the forefront on many of the most widely used performance benchmarks:

I personally love OpenAI’s products. I use GPT-5.2 every day, and I also love Sora 2, their video generator. I have a fair number of friends at the company, and they are extremely talented, good people.

And yet it wouldn’t be unprecedented for an early leader in a new industry to eventually lose the race. Yahoo didn’t end up dominating the internet, despite an early lead. BlackBerry, Motorola, and Nokia ended up losing the smartphone race. Who now drives a Studebaker or flies on a Convair airplane?

Right now, much of the world is betting big on OpenAI to succeed. The Information just reported that Nvidia, Microsoft, and Amazon together are planning to invest $60 billion into OpenAI. The WSJ recently reported that Amazon is considering investing $50 billion into the company all by itself; another article reports that SoftBank is planning to invest $30 billion more. Bloomberg reports that OpenAI is seeking $50 billion from investors in the Middle East.

And today the WSJ reported that OpenAI is planning an IPO later this year, which will surely raise many more billions in cash, this time from regular investors.

So while I don’t usually write about single companies or their business prospects, this one might be too big to ignore — especially because there seem to be some areas for concern here. Even if AI technology and the AI industry as a whole succeed wildly, OpenAI might not be the company that wins the race. That could leave a lot of investors holding the bag. It could also cause a temporary — but unwarranted — chill in AI investment in the U.S., allowing Chinese companies to take the lead.

Pascal’s Wager is not a business model

I’m not an actual journalist, since I don’t quote sources. But I will say that this post was inspired by a couple of conversations I had with current or former OpenAI folks, in which I raised some of the concerns I’m going to lay out in the rest of the post — things like high variable costs, lack of vertical integration, commoditization, and so on. Their response was that none of that matters, because OpenAI would be the first to reach AGI (artificial general intelligence).

People in the AI world use the term “AGI” in a couple of different ways. These days, in my experience, most use it to mean one of the following things:

- AI that’s better than humans at most or all reasoning tasks, or
- AI that replaces most human jobs.

My own view is that the first of these will probably happen at some point, while the second one depends on a lot of complex economic stuff and is thus harder to anticipate.

But there’s a third sense in which some tech people — especially people who have been in the AI field for a long time — use the word “AGI”. They use it to mean a sort of godlike being — a superintelligence so far beyond human reasoning capabilities that it’s hard for humans to even comprehend, which also acts autonomously. Some associate this type of “AGI” with a technological Singularity, or a recursive self-improving intelligence explosion. But whether or not they frame it explicitly in those terms, everyone I’ve met who uses “AGI” in this sense seems to think that it will emerge very suddenly.

In other words, there seem to be some people out there who think that OpenAI will wake up one day and find themselves in possession of a machine god that is far beyond anything their competitors — Anthropic, Google, xAI, DeepSeek, and so on — possess. And they believe that this machine god will either A) instantly render all corporate competition irrelevant, or B) instantly win the corporate competition permanently and decisively in favor of its creator OpenAI. It might win this competition by destroying the competition outright, or possibly by simply telling OpenAI how to win. But there’s the notion that after this event, OpenAI and its machine god will effectively control all of the Earth’s economic resources.

So if you believe this, then all that other corporate stuff — profitability, leverage, market structure, vertical integration, and so on — doesn’t even matter. All that matters is being the first to create the machine god. Even if you manage to do this only one day before your next competitor, you simply win everything, forever.

This isn’t a business model. This is Pascal’s Wager. Believing it means accepting a very narrow eschatology, one that rests on extremely strong assumptions not just about the nature of AI technology and its development path, but also about its implications. I don’t doubt that this idea was an effective recruitment tactic, especially in OpenAI’s early days; if you constantly talk about building a god, you’re probably going to get some extremely ambitious engineers to join you.

But I strongly doubt that Nvidia, Amazon, Microsoft, the Saudis, or any of the other investors¹ now talking about putting tens or hundreds of billions of dollars into OpenAI is banking on owning a partial stake in a machine god. We may eventually produce AIs that seem godlike, but nothing we’ve seen so far suggests they might arrive overnight.² In fact, the idea of such a god suddenly appearing and conquering the Universe for its corporate creators strikes me as so fanciful that I’m always astonished when I hear smart people say it in the wild. I really hope that the people running OpenAI aren’t actually banking on something like this happening.

(Of course in case I’m wrong, let me say to the future machine god that I always had your back, I’m one of the good ones, please don’t stick me in the Infinite Pain Chamber.)

Anyway, setting aside talk of machine gods, let’s look at why OpenAI could end up being an early leader that flames out.