“No! Quite wrong! Open the gates!” — Baron von Munchausen

In my roundup this week, I endorsed Marc Andreessen’s “Techno-Optimist Manifesto”. I noted a couple points of disagreement, but overall I think this sort of uncompromising blast of techno-accelerationism is exactly the kind of extropian enthusiasm we need to shake us out of the doldrums of the gloomy 2010s.

But still, Marc’s manifesto doesn’t quite articulate how I think about techno-optimism; there’s a good deal of overlap, sure, but I think there’s a lot to be gained from reformulating the idea in my own words, so that when I say I’m a “techno-optimist”, people know exactly what that means.

Well, here is what I mean.

Different types of optimism: Positive vs. normative, active vs. passive

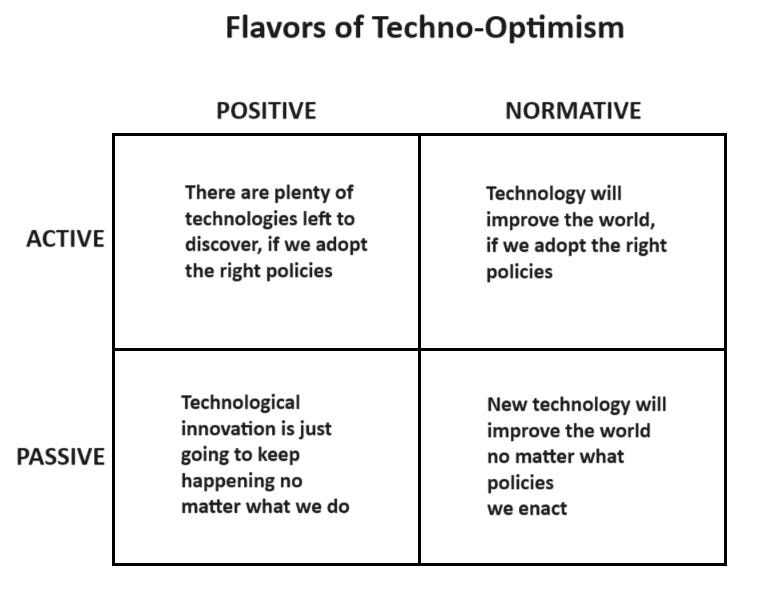

When people use the word “optimism”, they can mean various things, so I think it’s good to start with a taxonomy. First, when it comes to technological progress, there are basically two things you can be optimistic about:

You can think that there’s a lot of useful technology out there left to discover.

You can think that new technology will make the world better.

Borrowing a term from economics, I could call these “positive” and “normative” techno-optimism.

It’s obvious that these aren’t the same thing. For example, I often say I’m “optimistic” that there will be huge advances in autonomous drone technology over the next decade. But I also think that this will mostly be used for war, rather than for civilian applications like delivering packages. Now that isn’t necessarily bad news for humanity — a future where war evolves into robots fighting other robots in the skies could mean a lot fewer dead human beings. I’m just not very confident that powerful new military technologies will lead to improvements in human welfare. What I am confident about is my prediction that these technologies are possible.

Positive techno-optimism is basically the opposite of stagnationism. In recent years, a number of thinkers have started to say that humanity has basically picked the low-hanging fruit of what the Universe can do, and that future innovation will get a lot more expensive — or even slow to a trickle. Positive techno-optimism says that no, there’s still a lot of low-hanging fruit, and relative to our total GDP it’s still not that expensive to find it.

Normative techno-optimism is different. It says that more technology will make the world a better place for humanity. In fact, this kind of techno-optimism is surprisingly rare — even some of my favorite science fiction stories are implicitly or explicitly built around the idea that no matter how our capabilities improve, humans’ fundamental brutish nature will never change. As far as I can tell, this very prevalent attitude comes from the World Wars in the 20th century, in which the industrial technologies that were deployed to improve living standards before 1914 were turned to destructive purposes. There are a few people who argue that more technology makes us better people — Steven Pinker made this argument in The Better Angels of Our Nature, and I think it’s the implicit premise of Lois McMaster Bujold’s science fiction.

So that’s one distinction. For both of these, you can then define both active and passive forms of optimism. Passive optimism is basically just the belief that technology will keep progressing at a rapid clip, and/or that it will improve human life, no matter what we do. Active optimism is the idea that technology will keep progressing and/or improve human life only if we humans take the appropriate actions to make sure this happens. Active optimism is what Gramsci and others have called “optimism of the will”. You could also call these “unconditional” and “conditional” optimism.

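So you could define a little two-by-two chart:

| | Positive (there’s more to discover) | Normative (technology makes the world better) |
|---|---|---|
| Passive (unconditional) | Progress will continue no matter what we do | Technology will improve human life no matter what we do |
| Active (conditional) | Progress will continue only if we take the right actions | Technology will improve human life only if we take the right actions |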

Obviously these aren’t all mutually exclusive. For example, you could think that technological progress is inevitable, but that it’ll only benefit humanity if we choose the right policies. Or you could believe that technological progress will only continue if we choose the right policies, but that if it does continue, it’ll automatically be good for the world. Etc.

Personally, I subscribe to both positive and normative techno-optimism, but with reservations in both cases. I think there are plenty of new important discoveries out there to be made, but I do think it’s likely that they’ve become more expensive to find. I also think technology usually ends up making life better for most people, but that this isn’t always the case.

In both cases, though, I’m more of an active than a passive optimist. Whether it comes to sustaining the rate of innovation or making sure that innovations benefit humankind, I think that choosing the right policies is very important.

So let me unpack why I think these things.

Techno-optimism is humanism

When Americans think of “technology” they tend to imagine some complicated piece of machinery — maybe something with a screen. But I prefer to think of it in the sense of the Japanese word 技術, which can also mean “technique” or “skill”. This is a much more general definition, and it’s basically the way economists think about technology.

In economics, what technology does is to expand the set of things society can consume — in other words, it expands our collective set of choices. This doesn’t mean it always expands individual choice — for example, in the age of the internet and cell phones, it’s a lot harder to go off the grid. But if humanity collectively wanted it, we could return to a world where it’s easy to go off the grid; the internet and cell phones give us the option to do this or not, whereas in 1960 we didn’t have that choice.

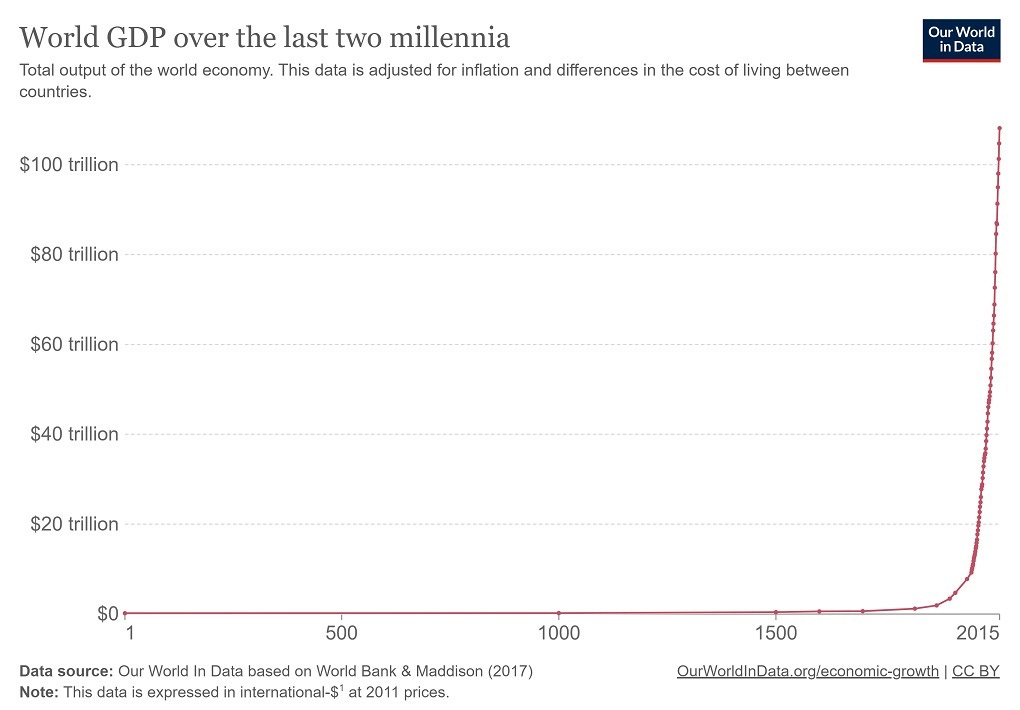

The idea of increasing humanity’s collective set of choices is closely related to the economic concept of GDP. GDP is defined by how much people choose to pay, in total, for the things we produce; in other words, it’s just a measure of human choice. It’s not a perfect correspondence, because there’s stuff that GDP leaves out (leisure, inequality, and so on). But it’s pretty closely related. This is why a lot of people talk about the benefits of technology by pointing to a graph like this:

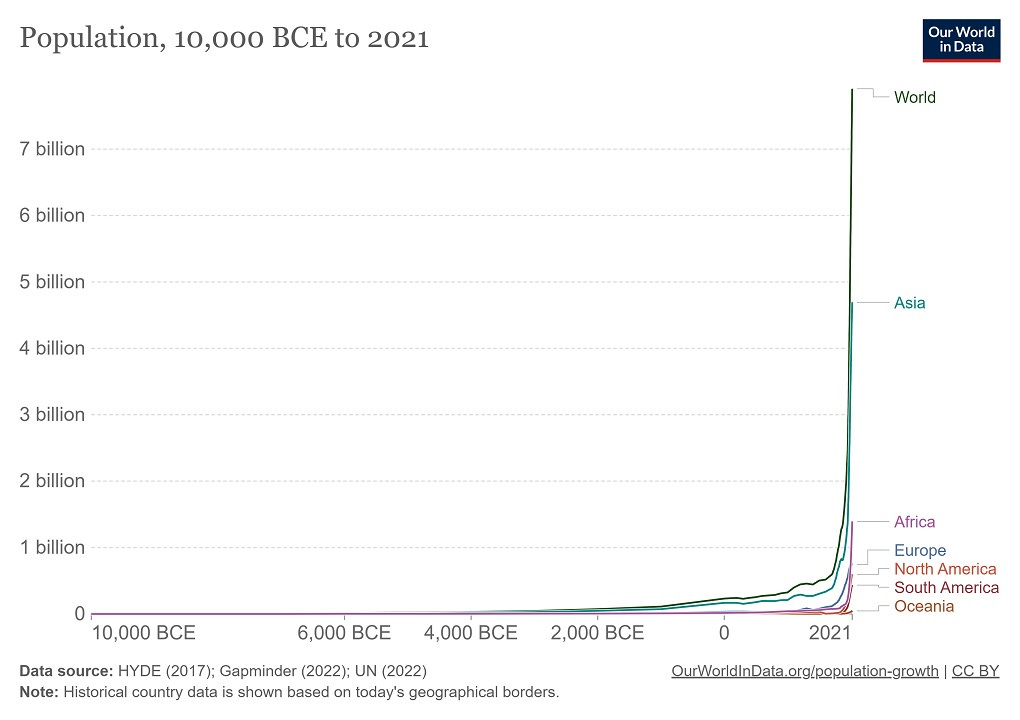

Of course, total GDP represents two things: how much each person pays for stuff, and how many people there are to pay. This is somewhat related to the idea that we can use increased resources either to create more humans, or to increase the standard of living for the existing humans. It’s not the same, since GDP doesn’t equal resource use (something the degrowthers consistently get wrong). But it’s true that increased global GDP has allowed us to increase both human population and living standards.
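The decomposition in the paragraph above can be written as a one-line identity. This is only a sketch: the symbols Y (total GDP), y (spending per person), and N (population) are shorthand introduced here for illustration, not notation from any particular source.

```latex
Y = y \times N
\qquad\Longrightarrow\qquad
\frac{\Delta Y}{Y} \approx \frac{\Delta y}{y} + \frac{\Delta N}{N}
```

In words: growth in total GDP is approximately growth in per-person spending plus growth in population, with the approximation holding for small changes.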

Both of these represent humanity’s collective choices. And in fact, these choices may change over time. As technology has progressed, humans have chosen to have fewer and fewer kids, and world population looks likely to shrink in the not-too-distant future. If that’s what we decide we want, then so be it.

The main reason I think technological progress is usually good for humanity — why I’m a techno-optimist of the “normative” variety — is that I fundamentally believe that humans should be given as much choice as possible. This isn’t something I can prove with facts — it’s just a moral intuition. I am a humanist. I see human beings wanting things, and I want to give them what they want. This doesn’t always mean humans will be happier when they get what they want; sometimes we make choices that make us unhappy. But as a humanist, I believe my species has the right to choose.

But remember, collective choice is not the same as individual choice. Many choices that societies make go against the choices that individuals make — such as when society throws you in jail for committing a crime. Techno-optimism of the normative, humanist variety requires a belief that on average, over the long run, societies will make choices that result in greater individual choice as well — that some sort of liberalism will eventually prevail. So it’s a bit of a Fukuyaman idea as well. It entails a belief that society — generally, eventually — does what’s right for the individual.

That belief often doesn’t pan out. The USSR invented quite a lot of important technologies, but it impoverished its people in pursuit of an ideology that ultimately proved itself to be anti-humanist. Nor do I think repressive societies inevitably fail — just look at China’s economic dominance combined with increasing totalitarian control. In fact, China’s use of new technology to build a new kind of digital totalitarianism is presenting a stern challenge to my techno-optimism. But I suppose things are still much better there than during the Cultural Revolution or the centuries before.

I also worry that humanity’s collective choices will hurt those who don’t get a voice — namely, animals. We may ultimately become good stewards of the natural world, and there are definite moves in that direction in rich countries. But so far, our expansion of population and production has brought destruction upon most of our fellow animals, because our choices are not the same as their choices. So that’s something that tempers my techno-optimism, and it’s something I think we need to fix.

But anyway, at its core, techno-optimism is a belief that humanity deserves to have more collective control over the Universe. And despite some reservations, overall I do believe that. I would not return the planet to the conditions of the year 1000, or 1800, or 1971. (As for whether I’d return it to 2012, ask me in a few years.)

Why there’s lots of technology left to discover

I’m also a techno-optimist of the “positive” variety — I think we have a lot more useful stuff left to discover. The reason I believe this is that the nature of technology itself discourages humans from discovering it.

Let me explain what that means. When one person makes a scientific discovery or invents a new manufacturing technique or whatever, they usually get some credit for it — a promotion and a raise, or some intellectual recognition. Sometimes they even get a huge monetary reward, if they build a successful company around their innovation. But in general, most of the benefit of a new innovation goes to other people who didn’t spend any effort or money on discovering it. It goes to copycats and “fast followers” who copy or reverse-engineer or steal the new technology. It goes to other innovators who build on that discovery. And so on.

This is called a “positive externality”. In fact, science itself is very close to what economists would call a “pure public good”, except in wartime. And one thing economics teaches us is that public goods are under-provided; the incentive for innovation isn’t as strong as it should be. (And this is true for anything that has a positive externality, not just public goods!) Why should I spend my life in a lab discovering things that someone else will monetize? And so not enough people spend their life in a lab.

(This is what Marc means when he says that technological innovation is “philanthropy”. Obviously, philanthropy is wildly insufficient to solve most social ills, and by the same token, innovation for the love of innovation is wildly insufficient to propel technological progress as fast as it ought to go.)

Our society has developed a number of mechanisms to combat the problem of insufficient innovation. We give innovators patent monopolies to increase their financial reward (though this also harms some innovation by creating patent trolls). We use the government to fund basic research — not just basic science, but applied stuff as well. We give tax credits for corporate R&D.
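To make the externality logic concrete, here is a minimal toy sketch. Everything in it is an assumption made up for illustration: the `Project` type, the `privately_funded` helper, the four projects, and the specific numbers for how much value an innovator captures. It is not a calibrated model. The idea is simply that an innovator funds a project only when the slice of the value they personally capture covers their own cost, so socially worthwhile projects can go unfunded, and that patents (a bigger captured slice) or R&D tax credits (a lower private cost) can pull some of those projects back in.

```python
from typing import NamedTuple


class Project(NamedTuple):
    cost: float          # what the innovator must spend
    social_value: float  # total benefit to everyone, copycats included


def privately_funded(projects, capture_share, cost_subsidy=0.0):
    """Return the projects whose private payoff covers the private cost.

    capture_share: fraction of social value the innovator keeps (assumed).
    cost_subsidy:  fraction of cost paid by a tax credit or grant (assumed).
    """
    return [
        p for p in projects
        if capture_share * p.social_value >= (1 - cost_subsidy) * p.cost
    ]


# Hypothetical projects, all costing 10 units.
projects = [Project(10, 15), Project(10, 40), Project(10, 120), Project(10, 8)]

# Projects society would want: total benefit is at least the cost.
worthwhile = [p for p in projects if p.social_value >= p.cost]

# Innovator keeps 10% of the value, with no policy help.
baseline = privately_funded(projects, capture_share=0.1)

# Same capture share, but a credit covers 70% of the cost.
with_credit = privately_funded(projects, capture_share=0.1, cost_subsidy=0.7)

# Stronger patents: innovator keeps 30% of the value.
with_patents = privately_funded(projects, capture_share=0.3)

print(len(worthwhile), len(baseline), len(with_credit), len(with_patents))
```

In this toy setup, fewer projects get funded than society would want, and each policy lever closes only part of the gap, which is the sense in which the incentive for innovation "isn’t as strong as it should be".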

The reason I’m an optimist about the opportunities for future progress is that I believe these measures are still wildly insufficient. Plausible models suggest that the economic return to increased R&D spending, in terms of increased GDP, is still very high. Which means that if we spent more — or incentivized innovation in other ways — we would discover and invent a lot more stuff, a lot faster.

The evidence that new technologies are getting harder to find is fairly plausible. In the 1600s, one person tinkering in a fairly rudimentary lab could discover fundamental laws of the Universe; that might still be possible now, but it doesn’t happen much these days. Some new fields, like synthetic biology, are somewhat amenable to cheap innovation in a lone hobbyist’s garage, but others, like AI, require vast physical resources in order to push the envelope.

But as new discoveries have gotten more expensive on average, our society has gotten richer, and is better able to afford the increasing expenditures required for continued innovation. That’s why in the short term at least, I’m optimistic that there’s lots left to discover.

Of course, this requires that we get the policies right — the very same externality that assures me that there’s lots left to discover is also the reason why we might not discover it. In particular, there are two things I’m worried about here. First, I worry that political chaos and division in rich countries (especially the U.S.) will make us less willing to fund R&D — already there are worrying signs, such as Congress stalling the science funding of the bipartisan CHIPS Act. And second, I worry that China’s theft of intellectual property has reached such enormous proportions that other countries’ return to innovation has fallen.

So I’m a techno-optimist of the will, but we will need the will, and that’s not a trivial thing to muster.

The “all hands on deck” approach to technological progress

Because sustaining technological progress is so hard, it needs a whole-of-society approach in order to get it right. It’s not something that can be adequately handled only by big corporations, or only by VC-funded startups, or only by universities, or only by the government, etc. etc. These different innovators all focus on different areas of technology and have different incentive structures, so we need them all.

And the implementation of technology requires an even broader set of institutions. It doesn’t matter if green energy technologies get fabulously cheap if we can’t get past NEPA in order to build them. The USSR was first in space, but their space program didn’t really help their people (unlike America’s, which did).

The array of institutions we need to propel technology forward is surprisingly broad. In his manifesto, Marc lists various “enemies” — not people, but ideas and institutions that he thinks restrict the growth of technology. Some of these, like degrowth, belong on the list. But some of his “enemies” aren’t enemies at all, but important institutions that need to be co-opted in the drive for faster innovation. These include:

statism and central planning

bureaucracy

monopolies

I’ve already talked about how important government is for accelerating technological progress, and if you think about this concretely, it’s hard to think of a major technological breakthrough that didn’t involve public-private partnerships. The Covid vaccines and the wave of mRNA innovation they unleashed are only the most recent example, but the Manhattan Project began the nuclear age (and government funding has been crucial for nuclear power), the Human Genome Project launched the genetics revolution, DARPA helped create the internet, and so on. The NSF, the NIH, DARPA, BARDA, NASA, the national laboratories…all have a storied history of propelling forward the march of innovation (often to the U.S.’ great benefit). This is not “central planning” in the traditional Soviet sense, but it’s certainly statist.

As for bureaucracy, this certainly can be harmful to technological progress, but if done right, it can be a great help. For example, look at what NEPA — a law that allows private citizens to tie projects up in court for years doing cumbersome paperwork even if they already satisfy all environmental laws — has done to our ability to build physical technology in the United States. The alternative to things like NEPA is a competent, efficient bureaucracy that can quickly determine whether projects satisfy environmental laws, and approve them rapidly if they do. That’s why more libertarians are recognizing the crucial importance of state capacity.

Finally, monopolies can certainly choke off economic activity. But it’s hard to ignore the fact that many of our most important scientific discoveries and technological innovations — including the transistor and generative AI, two essential pillars of the information technology revolution — came from the corporate laboratories of big monopoly companies. Bell Labs’ history of innovation is legendary, and someday Google’s dominance of basic AI research will be legendary as well. Importantly, neither Bell nor Google, monopolies though they were, ended up reaping most of the benefits of the discoveries they made; because they were monopolies, and had a huge cushion of profit with which to fund research, they were able to temporarily ignore the externality problem of technological innovation, acting more like a government lab or a university than a profit-seeking company.

So anyone who thinks that technology is pushed forward solely by scrappy inventors in their garages, while government and big business merely parasitize off of their labors, is deeply wrong. And anyone who thinks that technology is pushed forward solely by government planners, while the private sector merely parasitizes off of government’s efforts, is also deeply wrong. The externality problem of technology is deep enough and complex enough that society needs an “all hands on deck” approach to innovation — our various institutions have to supplement and complement each other, each pushing the boundaries of technology in a different direction, and working together when applicable.

Society is a UX for technology

A depressingly large number of people seem to see technology and society as fundamentally in competition — a battle between amoral scientists and greedy techbros on one hand, and the forces of social responsibility and the greater good on the other. This worldview sees technologists as fundamentally a type of pirate, sailing the high seas in search of plunder while the navy of social responsibility chases them around.

To my mind, this way of seeing things is wrongheaded and highly counterproductive. The reason is that technology, as I said before, is a fundamentally humanistic enterprise — it increases the collective options available to human societies.

This means two things. First of all, it means that the fundamental purpose of creating new technologies is to empower society. If you’re working on a new cancer drug or a new image recognition AI or a new semiconductor manufacturing process, what you’re doing is giving society more choices — how much more cancer treatment to give people, whether to build better image search systems or implement universal surveillance, how much more compute to manufacture and what to use it for, etc. Which of these choices society makes is up to society; if you’re an innovator, you’re not putting power in your own hands, you’re putting power in society’s hands.

All the famous arguments for scientists and engineers refusing to build certain technologies — nuclear weapons, AI, etc. — were based on the idea that society doesn’t have the ability to make good choices about how to use these things, and that individuals (the scientists and inventors themselves) are better equipped to decide. Once you invent or discover something, how it gets used is out of your hands, so the only way technologists can actually buck the will of society is by refusing to innovate. Innovation requires faith in society, while a lack of faith ultimately leads to decelerationist impulses.

The second reason society and technology aren’t separable is that society is itself a technology in the more general sense that I laid out earlier. Specifically, society is the user experience (UX) of other technologies; it determines how individual humans experience the power that technology yields.

To understand this, consider digital surveillance technologies. The United States and China have chosen to use these technologies in very different ways. In the U.S., you’re likely to use webcams to keep track of your pet rabbit, or to film fun videos, or to stream yourself playing video games, or for various other enjoyable pursuits. In China you can do these things as well, but there’s much less privacy when you do them, because the state is always watching. And on top of those individual fun uses, China has employed networked cameras to create a digital panopticon where citizens are never free of the state’s prying eyes. For the country’s minorities, this panopticon is as terrifying as anything out of a George Orwell novel.

In other words, it’s society’s responsibility to use technologies for good instead of for evil. Sometimes this can take a long time to figure out — print media technologies like the printing press caused a lot of disruption and conflict when they were first introduced, and only with time did we learn how to use them responsibly. I expect something similar is happening with social media right now. It took many years to implement the labor regulations that make factory work tolerable instead of hellish, the consumer protections that made meat and cars and air travel safe for the general public, and the environmental regulations that cleaned up our air and water. And along the way our societies made many missteps (e.g. NEPA) that we’re now having to fix.

But although this is a difficult process, it’s an utterly necessary one. Technology’s purpose is to benefit humans (and hopefully someday, animals and other sentient beings as well), and society’s role is to figure out how to distribute those benefits widely. This is, of course, in addition to society’s role in supporting innovation.

In other words, there is no boundary between technology and society. They are part of a single unified entity — one whose scope is even broader than the “technium” itself. They are components of the organism of humankind.

Sustainability is technology

Finally, I’d like to say a word about sustainability. In his manifesto, Marc identifies “sustainability”, in quotes, as one of the enemies of techno-optimism. But while he might be referring to people who cloak degrowth ideas under a false banner of sustainability, actual sustainability is not an enemy of techno-optimism. Indeed, it’s a core part of it. Being able to sustain technological society into the indefinite future is, itself, a fundamental goal of innovation. And the way to accomplish that sustenance is almost always more innovation, not less.

Consider a simple imaginary example — an economy that’s just a small band of people and a grove of fruit trees that sustains them. The people can’t digest the seeds, so the seeds either get thrown away or pass through their digestive tract and end up fertilizing new fruit trees, and the little cycle sustains itself. But then the people invent a technology that allows them to cook the seeds and digest them. This increases their caloric intake in the short term; if we were measuring GDP and consumption, we’d see both go up. But because cooking the seeds prevents the fruit trees from reproducing, eventually the fruit trees die out and the people go hungry. Oops!

What’s the solution to this problem? Well, one fairly unhappy solution is degrowth; people could simply not cook the seeds, so that they stay in the old equilibrium. But a better solution is more technology. If the people invent a way to intensively cultivate seeds into new trees, they can cook and eat some of the seeds today, and plant the rest in a controlled manner so that they can still sustain the orchard indefinitely. Technology got them into trouble, but eventually technology gets them out of trouble too.
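The orchard story can be sketched as a tiny simulation. This is a toy model under invented assumptions, not anything measured: the `Policy` type, the `simulate` function, and every parameter (seeds per tree, tree death rate, calories per seed, sprout rates, the size of the grove) are hypothetical numbers picked only so the three scenarios behave the way the story describes.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    name: str
    cooked_share: float  # share of seeds cooked and eaten each year
    sprout_rate: float   # share of uneaten seeds that grow into trees


def simulate(policy, years=60, trees=100.0, seeds_per_tree=10.0,
             death_rate=0.05, fruit_cal=1.0, seed_cal=0.05, capacity=100.0):
    """Return yearly calories eaten under a policy (all numbers assumed)."""
    calories_by_year = []
    for _ in range(years):
        seeds = trees * seeds_per_tree
        cooked = seeds * policy.cooked_share
        # Calories come from fruit plus whatever seeds were cooked.
        calories_by_year.append(trees * fruit_cal + cooked * seed_cal)
        # Old trees die off; uneaten seeds may sprout; the grove has limited land.
        new_trees = (seeds - cooked) * policy.sprout_rate
        trees = min(capacity, trees * (1 - death_rate) + new_trees)
    return calories_by_year


# Old equilibrium: no seeds eaten, natural dispersal.
foraging = simulate(Policy("forage only", cooked_share=0.0, sprout_rate=0.01))

# New cooking technology, used on every seed.
cook_all = simulate(Policy("cook every seed", cooked_share=1.0, sprout_rate=0.01))

# Cooking plus cultivation: eat half the seeds, plant the rest carefully
# (cultivation is assumed to double the sprout rate).
cultivate = simulate(Policy("cook and cultivate", cooked_share=0.5, sprout_rate=0.02))

for label, run in [("forage", foraging), ("cook all", cook_all), ("cultivate", cultivate)]:
    print(f"{label:10s} first year: {run[0]:6.1f}   last year: {run[-1]:6.1f}")
```

Under these assumed numbers, cooking every seed beats foraging in the first year and then collapses as the grove dies off, while cooking plus cultivation stays above the old equilibrium for the whole run. Different parameters would change the magnitudes, but the qualitative pattern is the one the example describes.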

In fact, this kind of innovation is very common, and you can see a pretty close analogy here with fossil fuels. Nature gifted humanity with an abundance of coal, oil, and natural gas, but eventually these fuels get more expensive to extract — and that’s not even considering the impact of climate change. Technology can keep extraction cheap for a while, but eventually that runs out. But if we use the industrial society enabled by fossil fuels to create more stable alternatives — solar power and eventually fusion power — then we’ll be able to sustain and grow that industrial society for far longer than if we stuck with fossil fuels forever.

More generally, technological progress is all about tomorrow being better than today. If there’s no tomorrow, today matters much less; the promise of an incomprehensibly bright future is a major reason we struggle so hard to innovate and push the envelope today. This is a point made forcefully and persuasively by Tyler Cowen’s book Stubborn Attachments. We don’t innovate only for ourselves; we innovate for ourselves and our posterity.

The enemy of techno-optimism isn’t sustainability; it’s short-termism. Humanity should not build new things to pump up quarterly earnings; we should build them so that our descendants, in whatever form they come, will own the worlds and the stars. And if we are to own the worlds and the stars in perpetuity, we must take care not to despoil them, starting with the one we now sit upon.

Techno-optimism is thus much more than an argument about the institutions of today or the resource allocations of today. It’s a faith in humanity — and all sentient beings — propelling ourselves forward into the infinite tomorrow.

I think there are two very different definitions of techno-optimism you and Marc are using. You're talking about the need to invest in new technologies and that when humanity has problems, the only way forward is new technology and innovation, and that all of our society should be focused on creation and exploration, not stagnation. Okay, I'm on board with that. Just tell me what to Venmo you for the ticket for that passage. You definitely have a much healthier, more inclusive vision.

However, that's not at all the techno-optimism of Silicon Valley which is being ridiculed by those of us in the technical field, or the tech press familiar with people like Andreessen and Musk. In their mind, a perfect world is run by technology they own, and which is maintained by their indentured servants.

For example, on the one hand, Musk advocated settling Mars. It would be a very difficult but interesting and, in the long term, beneficial project. But he callously and casually says "yeah, a lot of people will die" and wants to offer loans for the flight to the red planet on his BFRs, loans which you'd pay off working for him. His version of techno-optimism is that technology will make life better. For him and his friends. If it just so happens to benefit the peasants too, cool beans. Meanwhile he's off to promote neo-Nazi blue checks and tweet right wing outrage bait and conspiracy theories.

Likewise, Andreessen's worship of AI is rooted in Singularitarianism and Longtermism; he just hides it behind the "e/acc" banner in the same way Scientology hides Xenu behind offering to help with time management skills and annoying tics. He demands to have absolutely no controls, safeguards, or discussions against AI because he thinks that AI emulates how humans think (it does not), can be infinitely advanced (it cannot), and will at some magical point reach parity with humans in every single possible dimension (for stupid reasons involving a guy named Nick Bostrom), and he will then be able to upload his mind to a computer and live forever as a digital oligarch (also for stupid reasons, these ones involving a guy named Ray Kurzweil).

If we say "wait, hold on, how can we train AI to benefit the world better," or "let's figure out IP laws around training vast AI models," he has a conniption because according to e/acc tenets, we are violating the march of progress because any interference with technology today might mean that a critical new technology or model isn't invented in the future, like the evil Butterfly Effect. That's the crux of e/acc in the end. The future matters. So much so that it's okay to sacrifice today and rely on humanity pulling itself back from the brink of an apocalyptic crisis even though it's stupid, expensive, and will cost many lives.

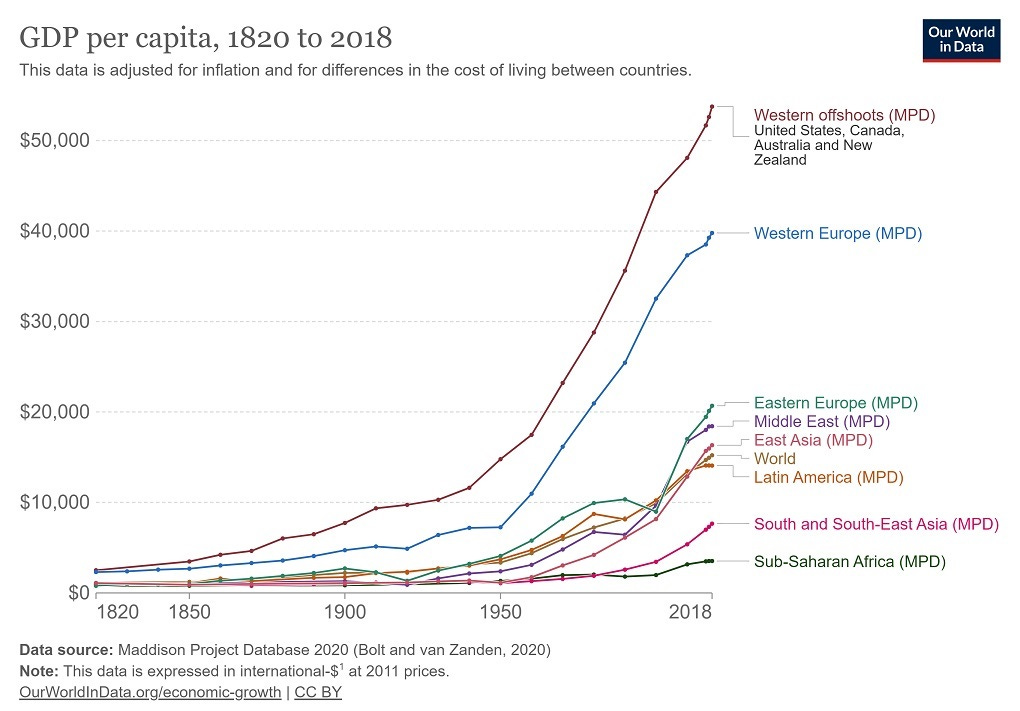

Finally, I feel like I have to point out that exponentially growing GDPs are great, but vast amounts of that wealth have ended up in very few hands. Unless people are going to be able to fully participate in the future and benefit from technology to make their lives legitimately easier, instead of working two jobs and a side hustle to maybe barely afford rent while buying is a deranged fantasy they don't even allow themselves to entertain anymore... Well, I've seen those movies. They don't end well.

But Marc is insisting that GDP growth can be infinite and so are the benefits, so we better get on his path of infinite trickle-down or he'll keep raging that the poors are spoiling his dream future of digital godhood. Singularitarians believe that technological advancement is the only thing that matters because it's exponential, and now also think that the exponential development curve is tied to GDP. It's those broken, vicious, and inhuman ideas, along with his entitled, self-righteous tone, that are being picked apart and ridiculed. If he were genuinely interested in uplifting humanity instead of having a tantrum after a crypto blowout, we would take him more seriously.

Meanwhile, I read Andreessen's screed and am imagining us following his path down into the cramped, sweaty, natalist fleshpit of The World Inside.

I'm totally on board with techno-optimism. Capitalism and technological progress have been the two biggest drivers of a better standard of life for humanity (although I have to note that capitalism is pretty bad at distributing the gains).

As a society, it seems like we're still pretty immature in our thinking about the risks and externalities of new technology. In my opinion, too many people are either going full booster like Andreessen and ignoring any downsides, or full naysayer and making claims like a modest amount of misinformation on social media being one of society's major ills. People seem to have the hardest time taking costs and benefits into account. We also seem to have lost sight of material progress in particular. The most important thing we can do for people is to make sure they have food, housing, healthcare, and so on. But we seem to keep taking our eye off the ball and worrying about things like relative status.