Some simple lessons from China's big AI breakthrough

Preventing LLM technology from spreading is a futile effort, but export controls can still work.

A Chinese company called DeepSeek recently released a series of large language models that are just about as good as the ones made by American companies like OpenAI and Anthropic, but cost less to make. Within much of the media and the tech community, this has been treated as an epochal event — a “Sputnik moment” for the AI race between the U.S. and China.

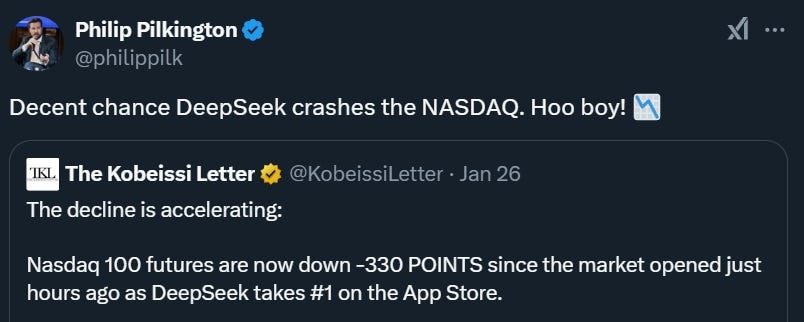

As with the L.A. wildfires three weeks ago, DeepSeek has led to an explosion of hand-wringing, shouting, bad takes, and other social media histrionics. Some people breathlessly proclaimed an imminent U.S. stock market collapse.

In reality, the Nasdaq is up about 25% for the year, and is at a higher level than it was two weeks ago.

Nvidia stock did take a hit from the news. Nvidia sells GPUs, the chips that are used to train AI models. DeepSeek shows that AI models can be trained using fewer GPUs; this caused many investors to predict that Nvidia’s chips will be less needed going forward. Because Nvidia is one of the world’s most valuable companies, any significant drop will look big in dollar terms. But the company’s stock is only down to where it was last October; over the past year, as of this writing, it’s still up over 90%.

In fact, as many people have already pointed out, there’s a simple reason why more efficient ways of creating LLMs could actually increase demand for Nvidia’s chips. When something gets cheaper, people buy more of it. So if LLMs become cheaper to produce, people will rent LLMs for more tasks. This will require purchasing more chips — especially since newer models use lots of compute for inference (i.e., “thinking” about the answer to each question).1

Whether demand for Nvidia’s chips goes up or down depends on whether the increase in efficiency outweighs the resulting increase in demand for LLMs. It’s certainly true that previous increases in efficiency — which have been enormous — don’t seem to have harmed Nvidia’s valuation much.
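The trade-off in the paragraph above (often called the Jevons paradox) can be made concrete with a toy model. This is a minimal sketch that assumes demand for LLM tasks has a constant price elasticity and that the price of a task falls one-for-one with the compute it needs. `chip_demand_ratio` and its parameters are hypothetical illustrations, not estimates of the real market.

```python
def chip_demand_ratio(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of new to old total chip demand after an efficiency gain.

    Toy model (an assumption, not an estimate):
    - efficiency_gain: factor by which compute needed per LLM task falls
      (10.0 means each task needs 10x less compute).
    - elasticity: price elasticity of demand for LLM tasks (>= 0).
    - The price of a task falls one-for-one with its compute cost, and the
      number of tasks demanded scales as price ** (-elasticity).
    """
    if efficiency_gain <= 0 or elasticity < 0:
        raise ValueError("need efficiency_gain > 0 and elasticity >= 0")
    price_ratio = 1.0 / efficiency_gain           # each task gets cheaper
    tasks_ratio = price_ratio ** (-elasticity)    # so people run more tasks
    compute_per_task_ratio = 1.0 / efficiency_gain
    # Total chips needed = tasks * compute per task,
    # which simplifies to efficiency_gain ** (elasticity - 1).
    return tasks_ratio * compute_per_task_ratio


if __name__ == "__main__":
    # Hypothetical 10x efficiency gain under three assumed elasticities.
    for eps in (0.5, 1.0, 2.0):
        ratio = chip_demand_ratio(10.0, eps)
        print(f"elasticity={eps}: chip demand x{ratio:.2f}")
    # prints x0.32, x1.00, x10.00 respectively
```

In this sketch, total chip demand scales as the efficiency gain raised to the power (elasticity − 1). Chip demand therefore rises only if demand for LLMs is elastic (elasticity above 1). Neither the text nor this sketch estimates that elasticity.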

Other people declared that DeepSeek would supercharge the global economy. A top macroeconomist declared that the Chinese LLM release would prove more important than the invention of electricity or the internal combustion engine.

Obviously it’s not possible to prove that statement wrong, but it seems highly unlikely, given that the model’s performance is about the same as that of other models, including other Chinese-made LLMs. The idea that DeepSeek is revolutionary rests on the fact that it can do the same thing much more cheaply. But while the company does seem to have made some real innovations here, Anthropic CEO Dario Amodei points out that the resulting cost reductions are roughly in line with previous cost decreases. LLMs are a sector where costs have been falling very fast, and there’s no reason to think that DeepSeek represents such a unique structural break from that trend that we should compare it to the invention of fire or the wheel.
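A little arithmetic can separate "on trend" from "structural break." Suppose comparable models have historically become some factor cheaper each year. A given cost reduction then corresponds to a certain number of years of that trend. The question is whether that number roughly matches the time that has actually elapsed. This is a minimal sketch: `years_of_trend`, `is_on_trend`, the tolerance, and every number in it are hypothetical, and none of them are figures from DeepSeek or Amodei.

```python
import math


def years_of_trend(cost_reduction: float, annual_decline_factor: float) -> float:
    """Years of historical trend that a given cost reduction corresponds to.

    cost_reduction: how many times cheaper a new model is than a comparable
        older one (8.0 means 8x cheaper). Hypothetical input.
    annual_decline_factor: how many times cheaper comparable models have
        historically become each year (4.0 means costs fall to 1/4 per year).
        Hypothetical input, not a figure from the text.
    """
    if cost_reduction <= 0 or annual_decline_factor <= 1:
        raise ValueError("need cost_reduction > 0 and annual_decline_factor > 1")
    # Solve annual_decline_factor ** t == cost_reduction for t.
    return math.log(cost_reduction) / math.log(annual_decline_factor)


def is_on_trend(cost_reduction: float, annual_decline_factor: float,
                years_elapsed: float, tolerance_years: float = 0.5) -> bool:
    """True if the reduction is within tolerance_years of what the trend predicts."""
    implied = years_of_trend(cost_reduction, annual_decline_factor)
    return abs(implied - years_elapsed) <= tolerance_years


if __name__ == "__main__":
    # Purely hypothetical numbers, chosen only to show the two cases.
    print(f"{years_of_trend(8.0, 4.0):.2f}")           # 1.50 years of trend
    print(is_on_trend(8.0, 4.0, years_elapsed=1.5))    # True: on trend
    print(is_on_trend(100.0, 4.0, years_elapsed=1.0))  # False: a break
```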

Finally, there were lots and lots of takes about U.S.-China competition. DeepSeek probably does have some relevance there, but the apocalyptic tone of much of the commentary was overblown. There was a flurry of articles with titles like “Does China's DeepSeek Mean U.S. AI Is Sunk?”, while one prominent AI commentator declared that Facebook had sold out America by making its AI open-source.

This is a bit silly,2 because DeepSeek itself is also open-source; if Facebook undermined America’s “advantage” in AI, then DeepSeek itself is undermining China’s. A more reasonable conclusion, as I’ll argue, is that there isn’t really that much national advantage here to undermine — keeping this sort of model proprietary is just not really as useful or feasible as in other areas of technology.

In addition to all of these overblown takes, there were lots of serious debates and speculation about DeepSeek. These included questions about what DeepSeek’s key technological innovations are, which chips it actually used to train its models, how much that training actually cost, whether and how DeepSeek evaded U.S. export controls, and so on. Not being an AI expert, I’m not qualified to evaluate those interesting debates.

But even without answering those tough questions, I think that there are some pretty obvious lessons a calm, rational outside observer can learn here, just from the fact that a random Chinese company managed to produce a frontier-level open-source LLM. As I see it, the key early takeaways are:

1. LLMs don’t have very much of a “moat”: a lot of people are going to be able to make very good AI of this type, no matter what anyone does.
2. The idea that America can legislate “AI safety” by slowing down progress in the field is now doomed.
3. Competing with China by denying them the intangible parts of LLMs (algorithmic secrets and model weights) is not going to work.
4. Export controls actually are effective, but China will try to use the hype over DeepSeek to give Trump the political cover to cancel export controls.