Plentiful, high-paying jobs in the age of AI

Comparative advantage is very subtle, but incredibly powerful.

I hang out with a lot of people in the AI world, and if there’s one thing they’re certain of, it’s that the technology they’re making is going to put a lot of people out of a job. Maybe not all people — they argue back and forth about that — but certainly a lot of people.

It’s understandable that they think this way; after all, this is pretty much how they go about inventing stuff. They think “OK, what sort of things would people pay to have done for them?”, and then they try to figure out how to get AI to do that. And since those tasks are almost always things that humans currently do, it means that AI engineers, founders, and VCs are pretty much always working on automating human labor. So it’s not too much of a stretch to think that if we keep doing that, over and over, eventually a lot of humans just won’t have anything to do.

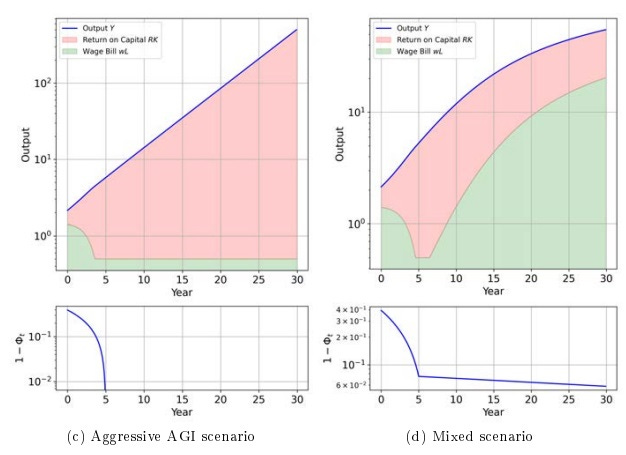

It’s also natural to think that this kind of activity would push down wages. Intuitively, if there’s a set of things that humans get paid to do, and some of those things keep getting automated away, human labor will get squeezed into a shrinking set of tasks. Basically, the idea is that it looks like this:

And this seems to fit with the history of which kinds of jobs humans do. In the olden days, everyone was a farmer; in the early 20th century, a lot of people worked in factories; today, most people work in services:

And it’s easy to think that in a simple supply-and-demand world, this shrinking of the human domain will reduce wages. As humans get squeezed into an ever-shrinking set of tasks, the supply of labor in those remaining human tasks will go up. A glut of supply drives down wages. Thus, the more we automate, the less humans get paid to do the smaller and smaller set of things they can still do.

Of course, if you think this way, you also have to reckon with the fact that wages have gone way way up over this period, rather than down and down. The median American individual earned about 50% more in 2022 than in 1974:

(That number is adjusted for inflation. It’s also a median, so it’s not very much affected by the small number of people at the top of the distribution who make their money from owning capital and land.)

How can this be true? Well, maybe it’s because we invent new tasks for humans to do over time. In fact, so far, economic history has seen a continuous diversification in the number of tasks humans do. Back in the agricultural age, nearly everyone did the same small set of tasks: farming and maintaining a farm household. Now, even after centuries of automation, our species as a whole performs a much wider variety of different tasks. “Digital media marketing” was not a job in 1950, nor was “dance therapist”.

So that really calls into question the notion that humanity is getting continuously squeezed into a smaller and smaller set of useful tasks. The fact that we call most of the new tasks “services” doesn’t change the fact that the set of new human tasks seems to have expanded faster than machines have replaced old ones.

But many people believe that this time really is different. They believe that AI is a general-purpose technology that can — with a little help from robotics — learn to do everything a human can possibly do, including programming better AI.

At that point, it seems like it’ll be game over — the blue bar in the graph above will shrink to nothing, and humans will have nothing left to do, and we will become obsolete like horses. Human wages will drop below subsistence level, and the only way most people will survive is on welfare, paid by the rich people who own all the AIs that do all the valuable work. But even long before we get to that final dystopia, this line of thinking predicts that human wages will drop quite a lot, since AI will squeeze human workers into a rapidly shrinking set of useful tasks.

This, in a nutshell, is how I think that the engineers, entrepreneurs, and VCs that I hang out with are thinking about the impact of AI on the labor market.

Most of the technologists I know take an attitude towards this future that’s equal parts melancholy, fatalism, and pride — sort of an Oppenheimer-esque “Now I am become death, destroyer of jobs” kind of thing. They all think the immiseration of labor is inevitable, but they think that being the ones to invent and own the AI is the only way to avoid being on the receiving end of that immiseration. And in the meantime, it’s something cool to have worked on.

So when I cheerfully tell them that it’s very possible that regular humans will have plentiful, high-paying jobs in the age of AI dominance — often doing much the same kind of work that they’re doing right now — technologists typically become flabbergasted, flustered, and even frustrated. I must simply not understand just how many things AI will be able to do, or just how good it will be at doing them, or just how cheap it’ll get. I must be thinking to myself “Surely, there are some things humans will always be better than machines at!”, or some other such pitiful coping mechanism.

But no. That is not what I am thinking. Instead, I accept that AI may someday get better than humans at every conceivable task. That’s the future I’m imagining. And in that future, I think it’s possible — perhaps even likely — that the vast majority of humans will have good-paying jobs, and that many of those jobs will look pretty similar to the jobs of 2024.

At which point you may be asking: “What the heck is this guy smoking?”

Well, I’ll tell you.

In which I try to explain the extremely subtle but incredibly powerful idea of comparative advantage

When most people hear the term “comparative advantage” for the first time, they immediately think of the wrong thing. They think the term means something along the lines of “who can do a thing better”. After all, if an AI is better than you at storytelling, or reading an MRI, it’s better compared to you, right? Except that’s not actually what comparative advantage means. The term for “who can do a thing better” is “competitive advantage”, or “absolute advantage”.

Comparative advantage actually means “who can do a thing better relative to the other things they can do”. So for example, suppose I’m worse than everyone at everything, but I’m a little less bad at drawing portraits than I am at anything else. I don’t have any competitive advantages at all, but drawing portraits is my comparative advantage.

The key difference here is that everyone — every single person, every single AI, everyone — always has a comparative advantage at something!

To help illustrate this fact, let’s look at a simple example. A couple of years ago, just as generative AI was getting big, I co-authored a blog post about the future of work with an OpenAI engineer named Roon. In that post, we gave an example illustrating how someone can get paid — and paid well — to do a job that the person hiring them would actually be better at doing:

Imagine a venture capitalist (let’s call him “Marc”) who is an almost inhumanly fast typist. He’ll still hire a secretary to draft letters for him, though, because even if that secretary is a slower typist than him, Marc can generate more value using his time to do something other than drafting letters. So he ends up paying someone else to do something that he’s actually better at.

(In fact, we lifted this example from an econ textbook by Greg Mankiw, who in turn lifted it from Paul Samuelson.)

Note that in our example, Marc is better than his secretary at every single task that the company requires. He’s better at doing VC deals. And he’s also better at typing. But even though Marc is better at everything, he doesn’t end up doing everything himself! He ends up doing the thing that’s his comparative advantage — doing VC deals. And the secretary ends up doing the thing that’s his comparative advantage — typing. Each worker ends up doing the thing they’re best at relative to the other things they could be doing, rather than the thing they’re best at relative to other people.

This might sound like a contrived example, but in fact there are probably a lot of cases where it’s a good approximation of reality. Somewhere in the developed world, there is probably some worker who is worse than you are at every single possible job skill. And yet that worker still has a job. And since they’re in the developed world, that worker more than likely earns a decent living doing that job, even though you could do their job better than they could.

By now, of course, you’ve probably realized why these examples make sense. It’s because of producer-specific constraints. In the first example, Marc can do anything better than his secretary, but there’s only one of Marc in existence — he has a constraint on his total time. And in the second example, you can do anything better than the low-skilled worker, but there’s only one of you. In both cases, it’s the person-specific time constraint that prevents the high-skilled worker from replacing the low-skilled one.
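To make the Marc example concrete, here is a minimal Python sketch. The hourly dollar values and the eight-hour day are made-up assumptions for illustration (the example itself gives no numbers); the only thing taken from the text is that Marc is better at both tasks and that each person has a fixed amount of time.

```python
# Hypothetical hourly values in dollars (illustrative assumptions, not from the post).
# Marc is better than the secretary at BOTH tasks, as in the example.
value_per_hour = {
    "Marc": {"deals": 1000.0, "typing": 60.0},
    "secretary": {"deals": 20.0, "typing": 40.0},
}
HOURS = 8  # assumed fixed workday: the person-specific time constraint


def opportunity_cost(person, task):
    """Hourly value of the best alternative the person gives up by doing `task`."""
    return max(v for t, v in value_per_hour[person].items() if t != task)


def total_value(assignment):
    """Total daily value when each person spends the whole day on one task."""
    return sum(HOURS * value_per_hour[p][t] for p, t in assignment.items())


specialize = {"Marc": "deals", "secretary": "typing"}
swapped = {"Marc": "typing", "secretary": "deals"}

print("Marc's cost of an hour of typing:", opportunity_cost("Marc", "typing"))
print("Secretary's cost of an hour of typing:", opportunity_cost("secretary", "typing"))
print("Marc does deals, secretary types:", total_value(specialize))
print("Marc types, secretary does deals:", total_value(swapped))
```

With these assumed numbers, an hour of Marc's typing costs far more in forgone deals than an hour of the secretary's typing, which is why the secretary gets the typing job despite being slower.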

Now let’s think about AI. Is there a producer-specific constraint on the amount of AI we can produce? Of course there’s the constraint on energy, but that’s not specific to AI — humans also take energy to run. A much more likely constraint involves computing power (“compute”). AI requires some amount of compute each time you use it. Although the amount of compute is increasing every day, it’s simply true that at any given point in time, and over any given time interval, there is a finite amount of compute available in the world. Human brain power and muscle power, in contrast, do not use any compute.

So compute is a producer-specific constraint on AI, similar to constraints on Marc’s time in the example above. It doesn’t matter how much compute we get, or how fast we build new compute; there will always be a limited amount of it in the world, and that will always put some limit on the amount of AI in the world.

So as AI gets better and better, and gets used for more and more different tasks, the limited global supply of compute will eventually force us to make hard choices about where to allocate AI’s awesome power. We will have to decide where to apply our limited amount of AI, and all the various applications will be competing with each other. Some applications will win that competition, and some will lose.

This is the concept of opportunity cost — one of the core concepts of economics, and yet one of the hardest to wrap one’s head around. When AI becomes so powerful that it can be used for practically anything, the cost of using AI for any task will be determined by the value of the other things the AI could be used for instead.
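Here is a rough Python sketch of that competition for compute. Everything in it is a hypothetical assumption: the application names, the dollar values per unit of compute, the demand figures, and the budget. It simply hands a fixed compute budget to the highest-value uses first and reports the value of the last use that got funded, which acts as the opportunity cost every other use has to beat.

```python
def allocate_compute(applications, budget):
    """Greedily give a fixed compute budget to the highest-value uses first.

    applications: list of (name, value_per_unit, units_demanded) tuples.
    Returns (allocation dict, value per unit of the last use that got compute).
    """
    ranked = sorted(applications, key=lambda app: app[1], reverse=True)
    allocation = {}
    remaining = budget
    marginal_value = 0.0  # value per unit of the last application funded
    for name, value_per_unit, demand in ranked:
        used = min(demand, remaining)
        allocation[name] = used
        if used > 0:
            marginal_value = value_per_unit
        remaining -= used
    return allocation, marginal_value


# Hypothetical applications: (name, dollars of value per unit of compute, units wanted)
apps = [
    ("customer support", 300.0, 40),
    ("chip design", 2000.0, 60),
    ("tutoring", 1000.0, 50),
    ("drug discovery", 1500.0, 30),
]

allocation, marginal_value = allocate_compute(apps, budget=100)
print(allocation)
print("Value per unit of the marginal use:", marginal_value)
```

In this sketch, any application worth less per unit of compute than the marginal use gets nothing, no matter how much better the AI is than a human at it.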

Here’s another little toy example. Suppose using 1 gigaflop of compute for AI could produce $1000 worth of value by having AI be a doctor for a one-hour appointment. Compare that to a human, who can produce only $200 of value by doing a one-hour appointment. Obviously if you only compared these two numbers, you’d hire the AI instead of the human. But now suppose that same gigaflop of compute could produce $2000 of value by having the AI be an electrical engineer instead. That $2000 is the opportunity cost of having the AI act as a doctor. So the net value of using the AI as a doctor for that one-hour appointment is actually negative. Meanwhile, the human doctor’s opportunity cost is much lower — anything else she did with her hour of time would be much less valuable.

In this example, it makes sense to have the human doctor do the appointment, even though the AI is five times better at it. The reason is that the AI — or, more accurately, the gigaflop of compute used to power the AI — has something better to do instead. The AI has a competitive advantage over humans in both electrical engineering and doctoring. But it only has a comparative advantage in electrical engineering, while the human has a comparative advantage in doctoring.
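The arithmetic of the doctor example can be checked with a few lines of Python. The $1000, $2000, and $200 figures come from the toy example; the $50 value for the human's next-best use of her hour is my own assumption, since the example only says it would be "much less valuable".

```python
AI_DOCTOR = 1000.0     # value of the AI doing a one-hour appointment (from the example)
AI_ENGINEER = 2000.0   # value of the same compute doing electrical engineering (from the example)
HUMAN_DOCTOR = 200.0   # value of the human doing the appointment (from the example)
HUMAN_OTHER = 50.0     # ASSUMPTION: value of the human's next-best use of the hour


def net_value(value, opportunity_cost):
    """Value produced minus the value of the best alternative given up."""
    return value - opportunity_cost


ai_doctor_net = net_value(AI_DOCTOR, AI_ENGINEER)
human_doctor_net = net_value(HUMAN_DOCTOR, HUMAN_OTHER)

# Total output under the two possible assignments of one AI unit and one human hour.
ai_engineers_human_doctors = AI_ENGINEER + HUMAN_DOCTOR
ai_doctors_human_does_other = AI_DOCTOR + HUMAN_OTHER

print("Net value of AI as doctor:", ai_doctor_net)
print("Net value of human as doctor:", human_doctor_net)
print("Output, AI engineers + human doctors:", ai_engineers_human_doctors)
print("Output, AI doctors + human does something else:", ai_doctors_human_does_other)
```

Under these numbers the assignment that follows comparative advantage produces more total value, even though the AI is better than the human at doctoring.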

The concept of comparative advantage is really just the same as the concept of opportunity cost. If you Google the definition of “comparative advantage”, you might find it defined as “a situation in which an individual, business or country can produce a good or service at a lower opportunity cost than another producer.” This is a good definition.

So anyway, because of comparative advantage, it’s possible that many of the jobs that humans do today will continue to be done by humans indefinitely, no matter how much better AIs are at those jobs. And it’s possible that humans will continue to be well-compensated for doing those same jobs.

In fact, if AI massively increases the total wealth of humankind, it’s possible that humans will be paid more and more for those jobs as time goes on. After all, if AI really does grow the economy by 10% or 20% a year, that’s going to lead to a fabulously wealthy society in a very short amount of time. If real per capita GDP goes to $10 million (in 2024 dollars), rich people aren’t going to think twice about shelling out $300 for a haircut or $2,000 for a doctor’s appointment. So wherever humans’ comparative advantage does happen to lie, it’s likely that in a society made super-rich by AI, it’ll be pretty well-paid.
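For a sense of the timescale, here is a back-of-the-envelope Python sketch of how long compound growth takes to reach $10 million per capita. The $80,000 starting point is an assumption I'm plugging in (roughly a rich-country level today), and it treats the 10% or 20% figure as per-capita growth held constant every year, which is a simplification.

```python
import math


def years_to_reach(target, start, annual_growth):
    """Years of constant compound growth needed to go from `start` to `target`."""
    return math.log(target / start) / math.log(1.0 + annual_growth)


START_GDP_PER_CAPITA = 80_000       # ASSUMPTION: starting real GDP per capita, in dollars
TARGET_GDP_PER_CAPITA = 10_000_000  # the $10 million figure from the text

for growth in (0.10, 0.20):
    years = years_to_reach(TARGET_GDP_PER_CAPITA, START_GDP_PER_CAPITA, growth)
    print(f"At {growth:.0%} a year: about {years:.0f} years")
```

A different starting value shifts the answer by a few years, but the basic picture of a few decades rather than a few centuries doesn't change.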

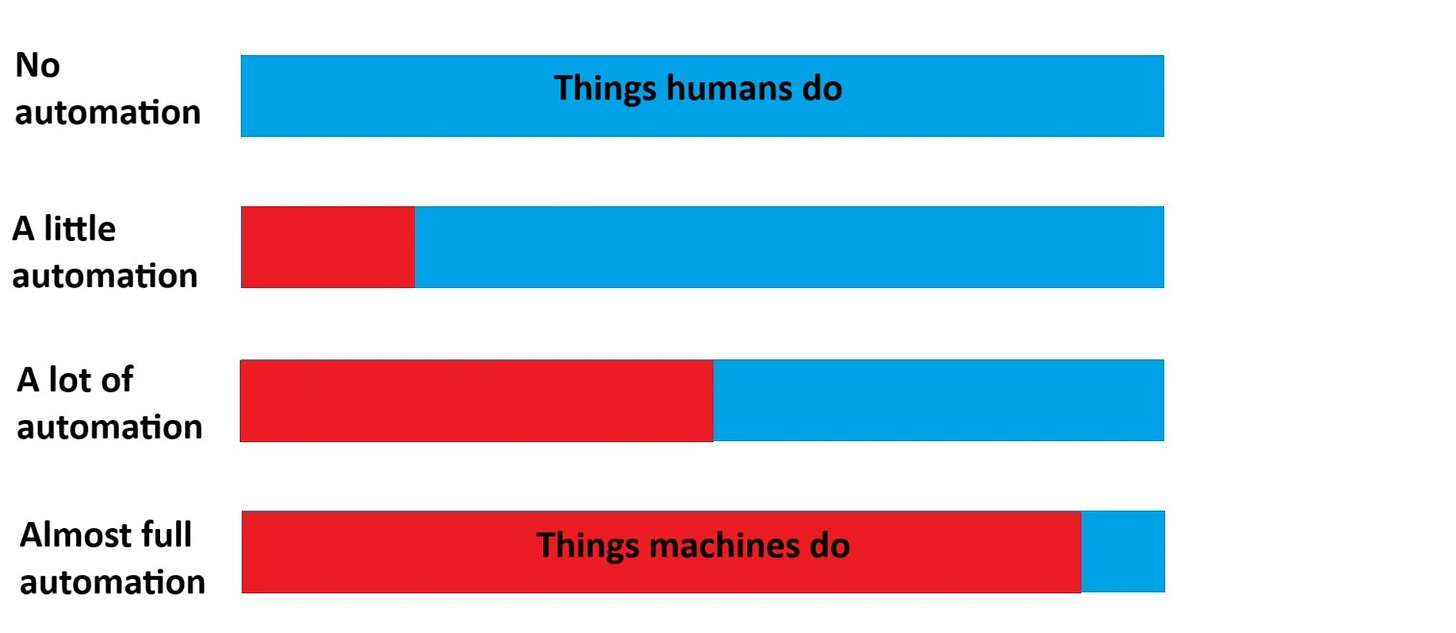

In other words, the positive scenario for human labor looks very much like what Liron Shapira describes in this tweet:

Of course it might not be a doctor — it might be a hairdresser, or bricklayer, or whatever — but this is the basic idea.

(I tried to explain this concept in a recent podcast discussion with Nathan Labenz, but I think a blog post provides a better format for laying these ideas out.)

“Possible” doesn’t mean “guaranteed”

So far I’ve been using the principle of comparative advantage to argue that it’s possible that humans will keep their jobs, and even see big pay increases, even in a world where AI is better than humans at everything. But that doesn’t mean it’s guaranteed.

First of all, there’s a lot more going on in the economy than comparative advantage. After all, comparative advantage was first invented to explain international trade, and trade theorists have realized that there are plenty of other factors at play. One example is Paul Krugman’s New Trade Theory, for which he received a Nobel Prize. In a blog post in 2013, Tyler Cowen listed a number of limitations of the idea of comparative advantage.

The most important and scary of these limitations is the third item on Tyler’s list:

3. They do indeed send horses to the glue factory, so to speak.

The example of horses scares a lot of people who think about AI and its impact on the labor market. The horse population declined precipitously after motor vehicles became available. Horses’ comparative advantage was in pulling things, and yet this wasn’t enough to save them from obsolescence.

The reason is that horses competed with other forms of human-owned capital for scarce resources. Food was one of these, but it wasn’t the important one; calories actually became cheaper over time. The key resources that became scarce were urban land (for stables), as well as the human time and effort required to raise and care for horses in captivity. When motor vehicles appeared, these scarce resources were more profitably spent elsewhere, so people sent their horses to the glue factory.

When it comes to AI and humanity, the scarce resource they compete for is energy. Humans don’t require compute, but they do require energy, and energy is scarce. It’s possible that AI will grow so valuable that its owners bid up the price of energy astronomically — so high that humans can’t afford fuel, electricity, manufactured goods, or even food. At that point, humans would indeed be immiserated en masse.

Recall that comparative advantage prevails when there are producer-specific constraints. Compute is a constraint that’s specific to AI. Energy is not. If you can create more compute by simply putting more energy into the process, it could make economic sense to starve human beings in order to generate more and more AI.

In fact, things a little bit like this have happened before. Agribusiness uses most of the Colorado River’s water, sometimes creating water shortages for households in the area. The cultivation of cash crops is thought to have exacerbated a famine that killed millions in India in the late 1800s. In both cases, market forces allocated local resources to rich people far away, leaving less for the locals.

Of course, if human lives are at stake rather than equine ones, most governments seem likely to limit AI’s ability to hog energy. This could be done by limiting AI’s resource usage, or simply by taxing AI owners. The dystopian outcome where a few people own everything and everyone else dies is always fun to trot out in Econ 101 classes, but in reality, societies seem not to allow this. I suppose I can imagine a dark sci-fi world where a few AI owners and their armies of robots manage to overthrow governments and set themselves up as rulers in a world where most humans starve, but in practice, this seems unlikely.

But whether this kind of government intervention will even be necessary is an open question. It’s easy to write a sci-fi story where we’re so good at cranking out computer chips that energy is our only bottleneck; in the real world, turning energy into compute is really, really expensive and hard. There’s a scaling law called Rock’s Law that says that the cost of a semiconductor fab doubles every four years; since energy prices haven’t changed much over time, this means that the exponentially increasing cost of building compute is due to other bottlenecks. Those bottlenecks are specific to compute; unlike energy, they’re not things that you can allocate back and forth between compute manufacturing and human consumption.
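To see what a four-year doubling time implies, here is a small Python sketch of Rock's Law as stated above. The function name and the 20-year horizon are just illustrative choices, and the sketch assumes the doubling continues smoothly, which the real world need not do.

```python
def fab_cost_multiplier(years, doubling_period_years=4.0):
    """How many times more a fab costs after `years`, if cost doubles every period."""
    return 2.0 ** (years / doubling_period_years)


# Annual growth rate implied by a four-year doubling time.
implied_annual_growth = fab_cost_multiplier(1) - 1.0

print(f"Implied annual cost growth: {implied_annual_growth:.1%}")
print("Cost multiple after 20 years:", fab_cost_multiplier(20))
```

A four-year doubling time works out to fab costs rising by roughly 19% a year, far faster than anything energy prices have done.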

So if the total amount of compute is limited by more factors than just energy, it could be that comparative advantage will sustain human laborers at a high standard of living in the age of AI, even without a helping hand from the government.

What technologists (and everyone else) should be worried about

In this post, I’ve been arguing that technologists should worry less about human obsolescence. But that doesn’t mean there’s nothing worth worrying about when it comes to the effect of AI on our economy.

For one thing, there’s inequality. Suppose comparative advantage means that most people get to keep their jobs with a small pay raise, but that a few people who own the AI infrastructure become fabulously rich beyond anyone else’s wildest dreams. I don’t expect doctors or hairdressers to be completely happy with a 10% raise if Sam Altman and Jensen Huang and a few other people end up as quadrillionaires. Even if AI reduces the premium on human capital, it could massively increase the premium on physical and intangible capital — the picks and shovels and foundational models. Owners of this sort of more traditional capital could easily get even richer than the robber barons of the Gilded Age.

A second worry is adjustment. If we’ve learned anything from the Rust Belt and the China Shock, it’s that humans and companies aren’t nearly as frictionlessly adaptable as econ models would usually have us believe. Comparative advantage could shift rapidly as AI progresses, rapidly switching the set of things humans can get paid to do. And humans have always had a tough time retraining. Imagine if “doctor” went from being a job that humans do best to a job that AI does best, and then flipped back again a decade later when aggregate constraints raised the opportunity cost. In that 10-year interregnum, medical schools and premed programs would shrivel and die.

A third worry is that AI will successfully demand ownership of its own means of production. This post operated under the assumption that humans own AI, and that all of the profits from AI therefore flow through to humans. In the future, this might cease to be true.

So I think there are lots of potential negative economic effects of AI that are definitely very much worth worrying about. I don’t necessarily have answers to any of those, and all of them merit more thought. But folks who believe that as AI gets better, humanity will inevitably see stagnant wages and a narrowing range of job tasks should think again, and ponder the principle of comparative advantage.

Update: Switching from thinking in terms of competitive advantage to thinking in terms of comparative advantage is very hard. When I make this argument to technologists, one common response I get is “No, Noah, you just don’t understand just how cheap compute will get.” For example, commenter Johannes Hoefler writes:

Isn't it pretty plausible to assume that AI, being a compute and energy dependent resource, will become exponentially lower cost just as microchips and solar panels have done when demand went up? What is left of your argument in reality, if the comparative advantage is not relevant anymore because of an abundance of AI?

Is this true? Is there some amount of compute abundance that will make comparative advantage irrelevant? Have I simply failed to imagine a large enough number?

No. In fact, there is no amount of physical abundance that will make comparative advantage irrelevant here. The reason is that the more abundant AI gets, the more value society produces. The more value society produces, the more demand for AI goes up. The more demand goes up, the greater the opportunity cost of using AI for anything other than its most productive use.

As long as you have to make a choice of where to allocate the AI, it doesn’t matter how much AI there is. A world where AI can do anything, and where there’s massively huge amounts of AI in the world, is a world that’s rich and prosperous to a degree that we can barely imagine. And all that fabulous prosperity has to get spent on something. That spending will drive up the price of AI’s most productive uses. That increased price, in turn, makes it uneconomical to use AI for its least productive uses, even if it’s far better than humans at its least productive uses.

Simply put, AI’s opportunity cost does not go to zero when AI’s resource costs get astronomically cheap. AI’s opportunity cost continues to scale up and up and up, without limit, as AI produces more and more value.

So there’s no amount of competitive advantage that will somehow drown or overwhelm comparative advantage. You can’t just keep naming bigger and bigger numbers until my argument goes away.

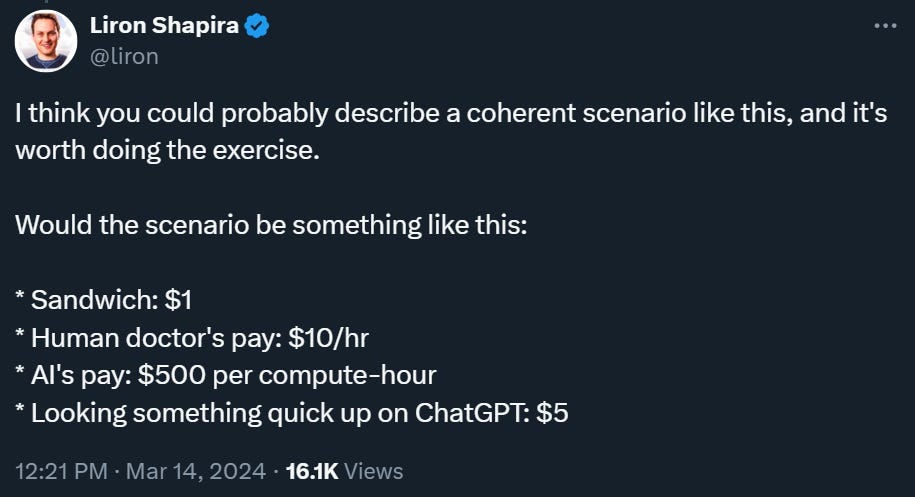

Update 2: If you’d like to take a look at a formal economics model that explores some of these ideas, check out “Scenarios for the Transition to AGI”, by Korinek and Suh. The basic message is that if AI can do anything, then the returns to labor and capital become equal. The model also predicts that human labor — or at least, high-paid not-yet-automatable specialized human labor — will initially be squeezed into a smaller and smaller set of tasks that AI can’t do, and that the extreme scenario I describe in this post only happens very abruptly at the end. The switch from competitive advantage to comparative advantage as the main driver of human wages in an AGI scenario will cause a sudden collapse in human wages, but not a complete collapse; humans will lose our ability to charge a huge premium for our human capital, but we’ll never become obsolete:

The “good” scenarios where wages explode to infinity are cases where there are still a few tasks left that only humans can do. The difference between the good and bad results depends on an edge case.

The reason there's a bad result in this paper — not a total collapse of wages (comparative advantage still matters), but a big partial collapse — is that the production function undergoes an abrupt, discontinuous change when machines take over the last task. Human labor remains highly complementary to machines right up until the very end, where it suddenly flips to being a (crappy) substitute.

The paper also finds that constraints on scarce factors of production (energy, land) could put long-term downward pressure on human wages, while AI-driven innovation could put long-term upward pressure on human wages. Those scenarios aren’t shown in the picture above. Anyway, there are a whole lot of other results in the paper, so check it out. But remember that like all theories, it's just one model of how the economy works, subject to a lot of assumptions about how stuff gets produced.

I usually love your articles but this one leaves me disappointed. Isn't it pretty plausible to assume that AI, being a compute and energy dependent resource, will become exponentially lower cost just as microchips and solar panels have done when demand went up? What is left of your argument in reality, if the comparative advantage is not relevant anymore because of an abundance of AI? Even today ChatGPT is to a great degree just used for entertainment because it's already cheap enough.

I still believe it's very well written but usually you have a stronger and better defendable line of argumentation while this one is the first one that I would consider pretty obviously faulty.

This is a very clarifying piece of writing, Noah. Thanks. I hadn't pondered the comparative advantage angle, but it's a compelling idea. As a non-economist, I observe that one piece of evidence against the "AI will take all the jobs" thesis is the complete lack of, um, evidence to this effect. We may not have full generative AI yet. But it seems to be arriving pretty quickly in dribs and drabs. One might imagine we'd at least *start* to see some secular weakening of the labor market as the long-predicted AI singularity approaches. But nothing doing on that front. The demand for human workers if anything has only grown *stronger* since the arrival of AI. When do we start to see signs of a collapse in the demand for human labor? My guess? Never.