Why it's so hard to fix the information ecosystem

Misinformation is a job; correcting misinformation is a hobby.

“The amount of energy needed to refute bullshit is an order of magnitude bigger than to produce it.” — Alberto Brandolini

RFK Jr., a presidential candidate whom Wikipedia describes as “an American environmental lawyer, politician, writer, and conspiracy theorist”, recently went on Joe Rogan’s show and discussed his anti-vaccine views. This created quite a hubbub in the media, or at least my little corner of it. Medical researcher Peter Hotez lambasted RFK, prompting Rogan to challenge Hotez to debate Kennedy on his show, which Hotez declined. There followed much angry shouting over whether scientists should debate conspiracy theorists or refuse to give them the legitimacy that such a debate might confer.

Personally, I am on Team Debate, and I hereby offer to debate RFK Jr. on Rogan’s show, or anywhere else. I’d also happily debate Rogan on any topic of his choice, just because that would be fun. Also, for the record, Covid vaccines are safe and effective.

But that is not what this post is about. This is a post about America’s information ecosystem, and why it’s so hard to combat misinformation. RFK Jr. isn’t just an antivaxxer; he is a proponent of practically every popular conspiracy theory in the country. The Twitter user RiverTamYDN has kept a helpful list:

Now, I should mention that RFK Jr. is extremely unlikely to win the Democratic nomination, since his main supporters are right-wingers, and Democrats don’t vote for people like that. But the attention and popularity he has received while putting his conspiracy theories front and center feel like a sign of the times. Americans, in general, are very worried about misinformation:

Nearly all Americans agree that the rampant spread of misinformation is a problem…Most also think social media companies, and the people that use them, bear a good deal of blame for the situation…according to a [late 2021] poll from The Pearson Institute and The Associated Press-NORC Center for Public Affairs Research…Ninety-five percent of Americans identified misinformation as a problem when they’re trying to access important information.

Certainly, worries about rampant misinformation are common in the media and among intellectuals. Personally, I’ve been exposed to a lot more obvious misinformation on Twitter than I ever was before I got on that platform.

Anyway, I have an idea about why our information ecosystem is so difficult to clean up, but first let’s talk about a few theories of why misinformation is rising — if it even is rising at all.

Why misinformation is rising (or is it?)

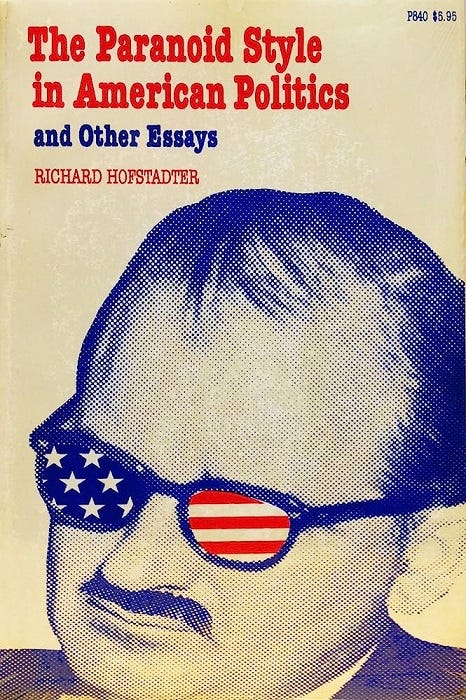

One possibility is that misinformation is not actually increasing, and the trope that it’s increasing is actually misinformation! After all, America has always had a conspiratorial streak to its politics, as described in 1964 by the historian Richard Hofstadter in his famous essay “The Paranoid Style in American Politics”. Maybe today’s wacky beliefs are just continuing this dubious tradition? In fact, there is a little bit of data to support this. A paper by Uscinski et al. (2022) found some evidence that belief in conspiracy theories in America is falling:

Of the 37 conspiracy theory beliefs examined, only six show a significant over time increase, ranging from four to 10 percentage points in magnitude. Of the remaining 31, 16 show no significant change and 15 show a statistically significant decrease. Significant decreases range in magnitude from three to 31 points. The average change across all conspiracy beliefs is −3.84 points. Altogether, the number of conspiracy theory beliefs that increased—six out of 37—is outweighed by the 31 conspiracy theories showing either no change or a significant decrease.

The authors also find little change in Covid-related conspiracy beliefs between 2020 and 2021, and a decrease in belief in QAnon.

This result could mean that misinformation is becoming less common, or that its persuasive power is decreasing over time. Alternatively, misinformation might have increased but true information might have increased even more, overpowering it. Another possibility is that the authors’ polls simply used a very different sampling methodology from the older national polls they follow up on, in which case the finding that the rise in misinformation is misinformation might itself be…well, misinformation.

A second possibility is that both misinformation and the increased worries about it are products of the hyper-polarized partisan political climate that America has been in for about a decade now. It’s notable that trust in the mass media has fallen among Republicans and Independents, but not among Democrats:

Meanwhile, most worries about “fake news” from outside the mainstream come from Democrats.

We could therefore just be seeing a bifurcation in the information ecosystem, driven by the well-known phenomenon of education polarization — the educated people who run news outlets like NBC or the New York Times are increasingly liberal (Fox News being the obvious exception), so many conservatives have opted to go create their own parallel media ecosystem on social media, alternative news websites, talk radio, etc. In this bitterly bifurcated situation, each side may simply think the other side’s information is the real “fake news”.

Partisanship may also be contributing to misinformation by turning it into a signal of group loyalty. In a post back in 2020, I hypothesized that political movements may require people to loudly proclaim false facts as a test of loyalty (or, for you econ people, a costly signal). Or, in the words of Max Fisher, “belonging is stronger than facts”. In fact, there is some evidence for an effect like this. A recent paper by Rathje et al. finds that when you pay people to get things right, some of those partisan differences start to converge:

[A]cross four experiments (n = 3,364), we motivated US participants to be accurate by providing financial incentives for correct responses about the veracity of true and false political news headlines. Financial incentives improved accuracy and reduced partisan bias in judgements of headlines by about 30%, primarily by increasing the perceived accuracy of true news from the opposing party (d = 0.47)…Altogether, these results suggest that a substantial portion of people’s judgements of the accuracy of news reflects motivational factors.

This echoes the results of earlier research.

Yet another hypothesis is availability bias. Whether or not misinformation is actually rising, it’s certainly true that social media has massively increased our awareness of potential misinformation. In 1996, you would watch your favorite TV news show, read your favorite newspaper, or listen to your favorite talk show, and the information presented to you would rarely be directly challenged. In the age of Twitter, pretty much every factual assertion, whether true or false, gets instantly denied, either by people who know it’s wrong or by people who simply want it to be wrong.

In other words, every piece of true information and misinformation now gets called “misinformation” by someone. Maybe misinformation was always common before the social media age, and now we’re just more aware of it. Or maybe social media has given a bullhorn to an infinite horde of bad-faith shouters looking to sow doubt for clout. But either way, seeing those allegations over and over and over seems like it could convince a lot of people that misinformation is more common than before.

(By the way, there is a very extreme version of this idea, which is that truth is so complex and so hard to know that the best we can ever hope to do is to establish consensus. If so, then “truth” and “facts” were always just stand-ins for a consensus created by an elite media oligopoly, and now that that oligopoly has been destroyed, there is simply no such thing as truth or facts anymore. I don’t buy this nihilistic worldview, but it’s interesting to think about.)

Finally, there’s the hypothesis that conspiracy theories are replacing organized religion in America. Some people probably need a connection with the ineffable and a sense of mysteries beyond their knowledge, and with church attendance down, some of those people might be turning to things like QAnon or RFK Jr.’s theories.

Anyway, all of the above hypotheses are interesting, and I think it’s likely that more than one holds water. But regardless of whether misinformation is increasing or not, and regardless of the reason why, I think there’s a basic reason why fighting it is an uphill battle.

Brandolini’s Law and the public goods problem

Many people think that Mark Twain said “A lie can travel halfway around the world before the truth can get its boots on.” Well, he didn’t; that’s misinformation. But many other people have expressed this idea throughout the centuries. For example, the French economist Frédéric Bastiat said:

We must confess that our adversaries have a marked advantage over us in the discussion. In very few words they can announce a half-truth; and in order to demonstrate that it is incomplete, we are obliged to have recourse to long and dry dissertations.

In recent years, this concept has become known as Brandolini’s Law, based on the Twitter quote from Italian programmer Alberto Brandolini that appears at the top of this post.

Frankly, I’m not sure this idea is right. Sure, it’s much easier to utter misinformation than to deliver a careful, well-supported refutation of that misinformation. We have this mental image of conspiracy theorists running around screaming “The world is flat!”, while weary debunkers make hours of video showing how to use geometry to prove it’s round. But having seen quite a lot of debates between conspiracy theorists and debunkers, I don’t think that’s how it usually goes down.

For one thing, hyperlinks exist. If one person has written an extremely detailed, thorough debunking of flat-eartherism, other people can just link to that instead of recapitulating the whole argument.

Second, you don’t have to fully and thoroughly debunk misinformation to combat it; often, a sound bite will do. For example, if someone claims that capitalism leads to mass starvation, you don’t have to write a paper about comparative economic systems and their effect on food insecurity; you can simply respond with a graph of how world hunger has gone down over the last three decades. It takes only a few seconds.

Third, people’s priors might be biased toward the truth. Antivaxxers shouted their heads off for years on blogs and podcasts and social media, and yet about 97% of Americans over the age of 16 got at least one dose of Covid vaccine. It might simply be inherently harder to convince people of B.S. than to convince them of truth. After all, few people can understand vaccine effectiveness or safety studies, but they know that billions of people all over the world have gotten their shots without having heart attacks, and they know the vaccines for polio and measles work, etc.

In any case, I’m willing to believe that combatting misinformation takes much more effort than disseminating it, but I’d like to see some evidence that this is the case.

But there is one other sort of cost asymmetry that I do think favors misinformation. It’s about public vs. private goods.

Some people may spread misinformation as a hobby or a political signaling device, but many people are doing it for personal gain. Antivaxxers have blogs and podcasts to promote. Propagandists serve their nation or movement. Corporate shills are collecting a salary, academic frauds get tenure, and so on. For all of these actors, spreading misinformation is a job rather than a hobby. It confers direct financial and/or reputational benefit.

Debunking misinformation, on the other hand, benefits far more people than the debunker. If you go on Twitter and convince 1000 people that vaccines are OK, you might save a life, but you won’t get paid for it. If a greedy corporation lies about its carcinogenic products, and you go around posting evidence against their lies, and people stop using the products as a result, you still won’t be the main beneficiary. In economics jargon, much of the benefit of debunking misinformation is external — it accrues not to you, but to the innocent bystanders whom you save from being misled.

As any economist knows, this is a big problem. Public goods — or anything with a positive externality attached to it — tend to be underprovided. Because there just aren’t that many incentives in place to combat misinformation, people will tend to do it just as a hobby. That will tilt the balance in favor of the misinformers, some of whom are doing it as a job.

This principle can also apply when private incentives exist but are asymmetric. A pro-Ukraine propagandist can probably make a bit of money, but not as much as a pro-Russian one, because Russia has a lot more money. And debunking propaganda makes little money at all. So the Kremlin’s lies have a natural advantage here.

What this means is that while many of the bad guys are working full time, most of the good guys have to do their work as a labor of love in their off hours. The great disadvantage of the truth is that sometimes it has to be its own reward. And that’s one reason why it’s going to be very, very hard to ever excise the “paranoid style” from American politics.

Think this is a typo: “yet about 97% of Americans over the age of 16 got at least one dose of Covid vaccine.” The link says the number is 77% of the population has received at least one dose, not 97%. Accidental misinformation.

The thing about conspiracy theories / misinformation is that some (a small number) are proved correct: Hunter Biden's laptop, the lab leak theory, etc. So I am glad you are Team Debate. Personally, while I am pro-vaccine, I am skeptical of the benefit of Covid vaccines for non-vulnerable populations. And I am not alone: lots of people know someone who had a severe reaction to the Covid vaccines, we all know people who are fully vaccinated and have caught and spread Covid, and there is plenty of good-quality data that supports the idea that the efficacy is limited and the side effects can be severe. And a 'proof', to play your argument back to you, is that people are comprehensively rejecting the CDC advice to get the bivalent boosters: uptake, when I last looked, was only just over 5%. So the conspiracy theory that we rushed into administering a poor vaccine is well worth debating. Shame that Hotez is running scared.