Lovecraftian intelligence

Is AI an incomprehensible cosmic horror?

I was going to write about the Fed today, but instead I thought I’d do something a little more fun. So today I’m going to ask the entertaining and silly question: Is AI an incomprehensible cosmic horror out of the pages of an H.P. Lovecraft story?

For those of you who are unfamiliar with H.P. Lovecraft, he was a horror fantasy writer who lived in New England a century ago. His stories often center around humans encountering various incomprehensible monsters and super-beings from outer space or other dimensions, and generally going insane as a result. The most famous of these monsters is the octopus-headed, bat-winged giant Cthulhu, but there are lots of even wackier entities with names like “Yog-Sothoth”, “Azathoth”, and “Nyarlathotep”. (He was also famously racist; I quit this “Hitler or Lovecraft?” quiz halfway through after getting every question wrong!)

But this post isn’t really about Lovecraft; it’s about AI. Recently, generative AI — as opposed to traditional “analytical” AI — has been getting a huge amount of press in the tech world, prompted by advances in Large Language Models (LLMs) and the release of popular AI art generation apps like Stable Diffusion.

There’s always a lot of discussion about whether and when AI — which already has “intelligence” in its name — will become truly intelligent in the way that humans typically understand the term. When I talk to researchers and engineers in the field, they often talk about the idea of “artificial general intelligence”, or AGI. Exactly what would constitute AGI is up for (vigorous) debate, but in general it means something that’s intelligent in the way that we humans are intelligent. From Wikipedia:

Artificial general intelligence (AGI) is the ability of an intelligent agent to understand or learn any intellectual task that a human being can…

[T]here is wide agreement among artificial intelligence researchers that intelligence is required to do the following:[11]

reason, use strategy, solve puzzles, and make judgments under uncertainty;

represent knowledge, including common sense knowledge;

plan;

communicate in natural language;

and integrate all these skills towards common goals. Other important capabilities include:

output as the ability to act (e.g. move and manipulate objects, change own location to explore, etc.)

Almost all of these skills and behaviors are defined analogously to human skills and behaviors. “Common sense” and “reason” are analogies to human reasoning. “Natural language” is language that sounds human, etc. Tests like the Turing Test are explicitly about whether an AI can sound like a human.

(In fact, this is how humans understand each other’s intelligence too. No one thinks exactly alike, but we model each other by assuming that other people think like we do, and then modeling the differences as small perturbations. But I digress.)

But any sci-fi fan will have spent a bit of time thinking about the possibility of very alien intelligences. There are the Borg in Star Trek, the planetary intelligences of the book Solaris and the movie Avatar, the space gods of A Fire Upon the Deep, the non-sentient aliens in various Peter Watts stories, and so on. All of these imaginary beings are postulated to think in ways that are qualitatively utterly different from the way human beings think.

So I’m wondering: Will the kind of intelligence that humans create with AI be an extremely alien intelligence rather than a human-analogous one? Looking at what AIs are doing — both in generative art and in applications like self-driving cars — I wonder if our AIs will be more like the cosmic horror entities in an H.P. Lovecraft story.

Is AI literally magic?

First, there’s the interesting question of whether AI is fundamentally incomprehensible. As I see it, this is equivalent to the question of whether AI is actually, literally magic.

At this point you may be thinking of the Arthur C. Clarke quote that “any sufficiently advanced technology is indistinguishable from magic”. But this is not what I am talking about at all. A car seems magical to people who have no idea how a car works — but open up the hood and learn about engines, and it becomes clear that a car is mundane. Computer programs can seem magical until you wade through the source code, etc. The “magical”-seeming-ness of most technologies comes from our own ignorance.

There’s a possibility, however, that AI is different. What we call “artificial intelligence” today is basically just a giant statistical regression — an algorithm with a bunch of parameters (hundreds of billions, for recent LLMs) whose values get adjusted when the AI is “trained”. Basically they’re giant correlation machines. As everyone knows, correlation doesn’t equal causation. Which means that when AIs spit out results, we don’t automatically have any idea why they’re getting the results they get.
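To make the idea of parameters being "adjusted" concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption: the five data points, the two-parameter model, the learning rate, and the step count are made up, and nothing here resembles the scale or architecture of a real LLM. It only shows the basic loop of nudging numbers until predictions match the data.

```python
# Toy illustration: "training" means nudging parameters to shrink prediction error.
# The data, learning rate, and step count are illustrative assumptions.

def train_linear(xs, ys, lr=0.01, steps=5000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(w, b)  # should land very close to w = 2, b = 1
```

With two parameters, a person can read the fitted values and see the pattern the model picked up. The loop itself never records a reason, only numbers that happen to fit, and with hundreds of billions of parameters there is no comparably easy way to read them.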

For this reason, AI is often referred to as a “black box”. We tell it to do something — read written numbers off a page, or predict the next search term you type into Google, or make a robot step over an obstacle, or create a painting based on a prompt, etc. And very often, the AI does what you tell it to do, with a remarkable degree of accuracy and consistency. But unlike a car or a traditional computer program or a spacecraft or a nuclear power plant, you don’t know how the AI did what you wanted.

This is more like the magic spells in a fantasy novel than it is like the hypothetical far-future technologies in a sci-fi novel. Magic spells are incantations that produce powerful results that are (usually) predictable but also ineffable; the wizard knows the prompt to use, and has a good idea of the result they’ll achieve, but the reason that the prompt leads to the result is simply “Well, it’s magic.” In fact, predictable results for fundamentally inexplicable reasons might be a good working definition of true magic.

Of course, we tend not to be satisfied with magical black boxes, so lots of people are at work trying to interpret and/or explain AI. In fact, people are making heroic efforts to figure out some heuristics and principles by which our AI algorithms might be operating. But so far, the capabilities of the AI tools we’re creating have outstripped any human attempt to understand what we’ve created, and despite our scientists’ most heroic efforts, our understanding of how AI works may always remain patchy and indistinct. We just don’t know yet.

(Note that this is also the case with human intelligence, as well as human societies. But let’s not go there today.)

In other words, there’s a chance that AI may always be both powerful and ineffable, like a cosmic entity from a Lovecraft story.

AI is great at horror art

The thing that made me originally think of AI as a Lovecraftian entity was the wave of AI art that has come out in recent months. Here’s a representative example from a Bloomberg article, using the AI art program Dall-E with the prompt “Staff at a magazine coming up with jokes”:

Parts of this art look startlingly photorealistic: the man’s lapels and hair and upper lip, the pages of a sketchbook, etc. Other parts look blurry and impressionistic. And some bits simply look downright horrifying. The women’s hands are misshapen and club-like. One woman’s jaw looks smashed, while the other’s mouth gapes impossibly wide like a kuchisake-onna. One of the man’s eyes looks swollen shut as if from injury, while the other is open a tiny bit and staring off at a random angle.

In other words, this looks like a picture of how artists have traditionally imagined Hell — humans distorted into inhumanity, writhing in some unimaginable torment. Or something from a dark sci-fi horror story like Harlan Ellison’s famous “I Have No Mouth, and I Must Scream”.

In fact, this sort of result is pretty par for the course with AI art programs. Try entering the prompt “Staff at a magazine coming up with jokes” into Stable Diffusion, and you’ll get similar images. There’s also a very famous Twitter thread in which an artist playing around with AI discovers unspeakable horrors that all seem to contain the ghostly presence of the same haggard woman with rosacea. I personally suspect that some of the similarity is simply the artist’s own fear-driven apophenia, but the horrifying nature of the images is undeniable.

However good it is at making other kinds of art, AI seems really, really good at making horror art. Naturally, instantly, good at it. Better, in my opinion, than the very best human horror artists. No H.R. Giger painting creeps me out like Stable Diffusion does.

Why is AI so good at freaking humans out? I have a guess, and it has to do with why humans perceive certain things as horrifying.

“Edge cases” and psycho killers

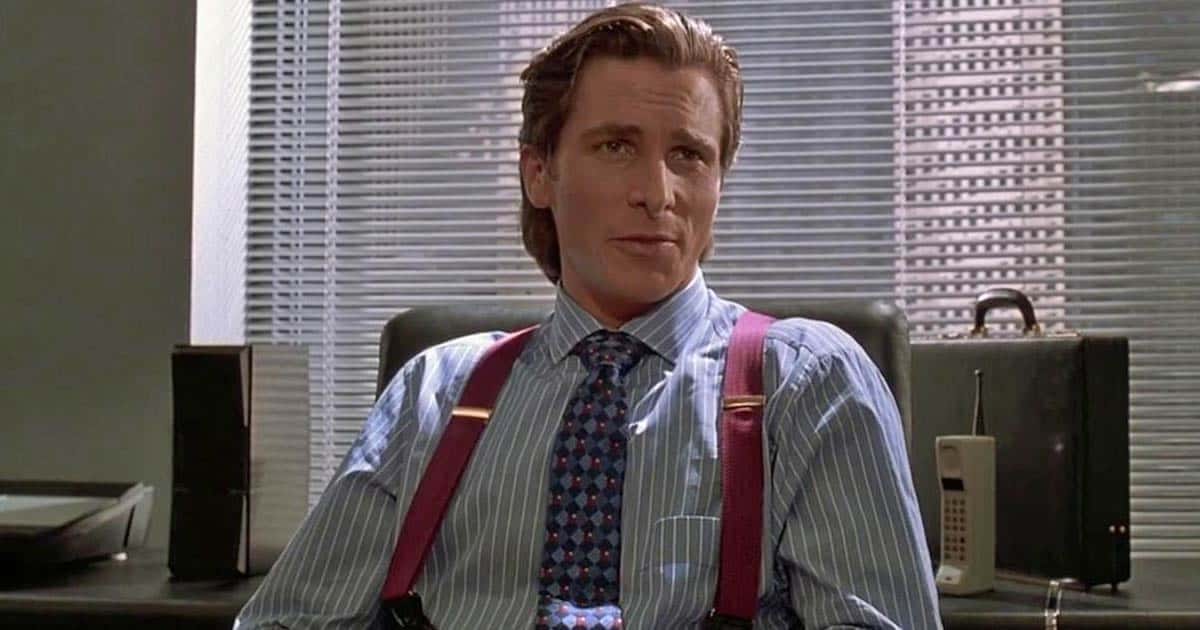

A quintessentially horrifying figure in human fiction is the psycho killer. This is someone who acts perfectly normal and friendly most of the time, then suddenly pulls out an axe and starts murdering people:

Why is this character so horrifying? I would argue that it’s because the psycho killer’s murderous behavior is unusual and unpredictable. Instead of a ravening monster walking down the street chopping people up at all hours, the psycho killer seems just like everybody else — until suddenly he doesn’t.

I would argue that AI art like the picture shown above is horrifying for similar reasons. Instead of simply being a vague, distorted representation like a Picasso painting, it’s a picture that looks photorealistic at a glance, yet upon closer inspection proves to have highly distorted details. The expectation of boring photorealism, just like the expectation that the psycho killer is just a boring businessman, creates horror when it is suddenly and violently violated. (This is similar to the notion of the uncanny valley, but a bit more generalized.)

In fact, this sort of sudden violation of predictable, expected behavior also seems to be a big problem in the field of autonomous vehicles (which are a sort of generative AI). Driverless cars usually perform incredibly smoothly and well, and then in a few cases they do unexpected and very bad things. A recent blog post declares that the tech “generally performs well, occasionally tries to kill you”. This could also describe the protagonist of the movie American Psycho.

These cases of sudden, unpredictable, catastrophic behavior are typically referred to as “edge cases”, and they represent one of the biggest barriers to widespread adoption of autonomous vehicles. They’re typically described as unusual cases that the AI hasn’t encountered before. But every situation and every set of inputs is formally unique; our notion of what is “usual” and what is “unusual” may be very different from an AI’s notion of the same. Or in more nerdy terms, the AI’s search space may simply be highly non-convex, for reasons we can’t know because AI is a black box, causing it to hit the edges in situations that seem, to humans, to be very similar to the cases the AI is used to.
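A loose analogy, and only an analogy, can be sketched in a few lines of Python. The code below fits a degree-10 polynomial exactly through 11 evenly spaced samples of a smooth curve (the classic Runge function, chosen here as an assumption for illustration) and then asks it about inputs that sit between samples it has already seen. This is not how a driving system or an image generator works, and it says nothing about convexity; it only shows that a fitted model can be accurate in one region and badly wrong in another that looks just as familiar.

```python
# Loose analogy only: a polynomial fitted exactly to 11 samples of a smooth curve.
# This is not a model of a self-driving car or an image generator.

def target(x):
    """The smooth curve being sampled (the classic Runge function)."""
    return 1.0 / (1.0 + 25.0 * x * x)

def interpolate(nodes, values, x):
    """Evaluate the Lagrange polynomial through (nodes, values) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(nodes, values)):
        weight = 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += yi * weight
    return total

nodes = [-1.0 + 0.2 * k for k in range(11)]  # evenly spaced "training" inputs
values = [target(x) for x in nodes]

def error_at(x):
    """Absolute gap between the fitted polynomial and the true curve at x."""
    return abs(interpolate(nodes, values, x) - target(x))

print(error_at(0.1))  # between two samples near the middle: small error
print(error_at(0.9))  # between two samples near the boundary: large error
```

Both query points sit halfway between two training samples, so to a human they look equally ordinary. The fitted polynomial is close to the curve at 0.1 and far from it at 0.9, even though it reproduces every training sample exactly.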

In other words, we may never get self-driving cars that don’t occasionally, suddenly, inexplicably try to kill you, or AI art that doesn’t occasionally produce little bits of horror.

I must say, I’m not particularly excited to see what edge cases look like for autonomous killer drone swarms. But given the international competition we now find ourselves in with China and Russia, we may ultimately have no choice but to find out.

Lovecraftian intelligence

My concept of a “Lovecraftian intelligence”, therefore, is something along the lines of “a system that behaves like a human-analogous intelligence some or even most of the time, but behaves in catastrophically alien ways in fundamentally unpredictable circumstances.”

(To be honest, only some of Lovecraft’s horrors are like this. Nyarlathotep does fun science exhibitions before he sends you into space to die, Yog-Sothoth has a kid with a weird hillbilly woman before it releases the Great Old Ones back into the world, and so on. But Cthulhu is just a big angry octopus-head who sends out psychic bad vibes. Oh, by the way: Spoilers!)

Lovecraftian intelligences are so alien that we’ll never really be able to resolve the argument over whether they’re truly intelligent or not. But unlike other alien intelligences that display their incomprehensibility at all times, Lovecraftian intelligences trick us most of the time — they seem human right up until they don’t. And this is an inherent property of the way they’re created — in the case of Lovecraft’s horrors, they’re the product of a fantasy author’s twisted mind, and in the case of AIs they’re tools that we create to do our bidding. They are a product of humanity, and this is what allows them to be so creepy to humans.

Anyway, I obviously have no idea if AI will always be a Lovecraftian intelligence. The science of AI explicability and interpretability may advance to the point where we’re able to modify the algorithms in ways that curb the horrifying edge cases. AI generation might remain similar to ineffable spellcasting, or it might not. We may someday invent AGI that behaves in a way much more analogous to human intelligence.

But in any case, if you’re wondering whether all of this has a point or policy implication, the answer is yes: Do not, under any circumstances, no matter what, give AIs control over weapons of mass destruction.

Long story short: The current round of AI is, intellectually, on the level of parlor tricks. There's no intellectual, theoretical, or scientific there there. Really, there isn't. (This is easy for me to say: I have an all-but-thesis in AI from Roger Schank.)

AI, originally, was defined to be a branch of psychology in which computation (the mathematical abstraction) would be used to analyze and theorize about various human capabilities, and computers (real devices) would be used to verify that analysis and the resultant theories. But AI grew a monster other half whose goal was to make computers do interesting things, with no intellectual basis or theoretical/academic concerns whatsoever.

It is this other half of the field that has taken over.

Slightly longer story: It turns out that we humans really do think; we actually do logical reasoning about things in the real world quite well. But we (1980s AI types) couldn't figure out how to persuade computers to do this logical reasoning. Simple logic was simply inadequate, and it turns out that people really are seriously amazing. (Animals are far more limited than most people think: there is no animal other than humans that understands that sex causes pregnancy and pregnancy leads to childbirth. Animals can "understand" that the tiny thing in front of them is cute and needs care, but realizing that it has a father is not within their intellectual abilities.)

So the field gave up on even trying, and reverted to statistical computation with no underlying models. The dream is that if you can find the statistical relationship, you don't need to understand what's going on. This leaves the whole game at essentially a parlor trick level: when the user thinks that the "object recognition" system has recognized a starfish, the neural net has actually recognized the common textures that occurred in all the starfish images.

So here's the "parlor trick" bit: the magician doesn't actually do the things he claims to do, and neither do the AI programs. (The 1970s and 1980s programs from Minsky, Schank, and other labs actually tried to do the things they claimed to do.) And just as getting better and better at making silver dollars appear in strange places doesn't lead to an ability to actually make silver dollars, better and better statistics really isn't going to lead to understanding how intelligent beings build quite good and effective models of the world in their heads and reason using those models.

So while AI isn't incomprehensible (it's a collection of parlor tricks), it is an intellectual horror show.

At this point I am willing to give the AI aliens a chance.