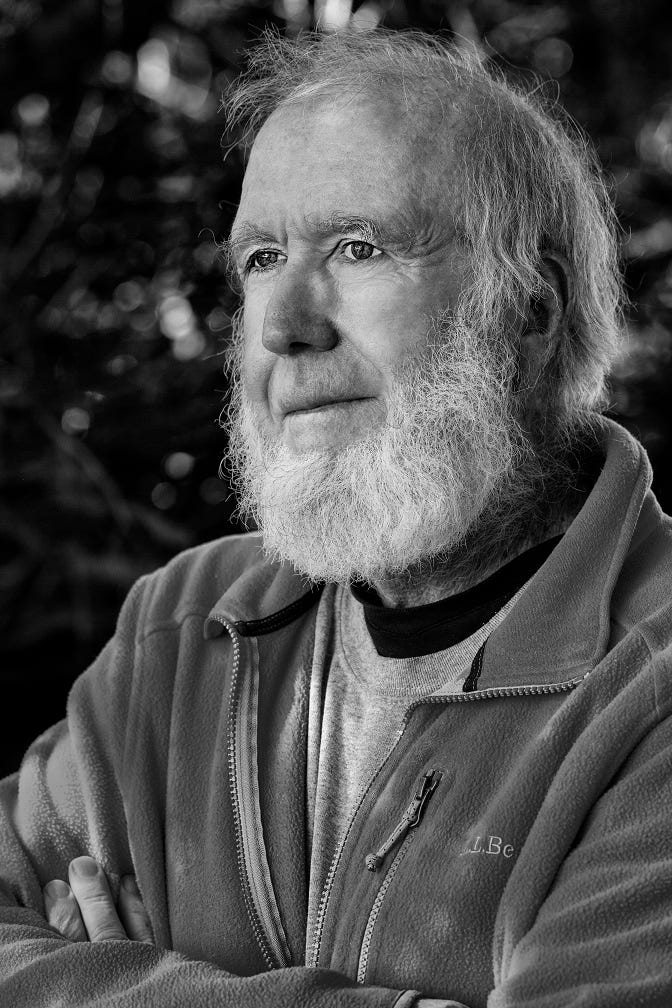

Interview: Kevin Kelly, editor, author, and futurist

A prophet of the tech world shares his thoughts on where it's all headed.

Kevin Kelly is one of the thinkers who helped define the ethos of the tech industry from its early days. As an editor of the Whole Earth Catalog in the 1980s and the founding editor of Wired magazine, he helped to integrate environmentalism and optimistic techno-futurism into a worldview that deeply influenced generations of founders, engineers, and creators. His work is so wide-ranging that it’s hard to sum it up in simple terms (I asked ChatGPT for help, but it could only give me vague generalities). His books and articles are a mix of technological prediction, interpretation of the current zeitgeist, and philosophical exploration. Interestingly, his most recent book, Excellent Advice for Living: Wisdom I Wish I'd Known Earlier, is a book of life advice! His intellectual breadth and ability to synthesize various seemingly unrelated trends and ideas are something to which I can only aspire.

Essentially, if you look at the fast-changing world of technology and you ask “Where is this all headed?”, and “Where should this all be headed?”, then Kevin Kelly is a natural person to ask. And in the interview that follows, that is basically what I asked him. I especially focused on his idea of the “technium”, which is all of human technology acting together as a single natural system or organism. We talk about whether this technium exists in competition with Earth’s natural environment, or whether the two can exist in harmony. We also discuss AI, social media, and crypto, and we talk about whether and how technological development can be actively steered. He also dispenses a bit of helpful life advice.

N.S.: So first let's talk about your new book, Excellent Advice for Living. What made you decide to write a book of life advice?

K.K.: It’s an inadvertent book. Writing a book of advice was never on my bucket list. But I like pithy quotes. When I want to change my own behavior, I need to repeat little behavior-modifying mantras as reminders. I have found that memorable proverbs give me a way to grab hold of lofty advice. So if I can distill a whole book’s worth of advice into a sentence, that gives me a handle for it, so I can easily bring the lesson forward when needed. With that in mind, I started the habit of compressing a lot of useful information into a short, memorable tip. Advice is best when directed at a specific person, so I decided to aim my advice at my adult son, who was in his early twenties. Once I started writing tiny bits of advice down for him, I discovered I had a lot to say — as long as I could telegraph it into a tweet. Most of my advice is ancient wisdom, evergreen notions that have been circulating forever. But I try to put everything into my own words, as few as possible. Most of my writing time on the project was spent trying to remove words and reduce the advice even further until each bit was less than 140 characters.

I like an old Irish custom where you give others a present on your birthday. So on my 68th birthday, I gifted 68 short bits of advice to my son, and while I was at it, I shared them with the rest of my extended family and, without any expectations, posted them on my blog. The list ricocheted around the internet. So in the following year I started jotting down more adages aimed at my two grown daughters. As I was composing them I kept asking myself three questions: Is this advice practical and actionable? Can I stand behind it as true for me? Is this something I wish I had known earlier? If a bit passed these three filters, I’d add it to my list. On my next two birthdays I shared more insights I wished I had known earlier. I must have been getting better because these maxims reached escape velocity and were picked up by bloggers, newsletters, and podcasters. They even made it onto the op-ed page of the New York Times.

It’s handy to have blog posts to point to, but I wanted a really easy way to pass these lessons on to a young person or someone young at heart. Hence a small printed book of 450 bits of unsolicited advice that I wished I had known earlier: Excellent Advice for Living, to be published by Viking/Penguin in May.

N.S.: You've spent much of your life as a writer and editor. So your advice should be particularly relevant for me! What are one or two pieces of advice from the book that I should take to heart?

K.K.: Here are a few I learned the hard way:

Most articles and stories are improved significantly if you delete the first page of the manuscript draft. Immediately start with the action.

Separate the processes of creating from improving. You can’t write and edit, or sculpt and polish, or make and analyze at the same time. If you do, the editor stops the creator. While you write the first draft, don’t let the judgy editor get near. At the start, the creator mind must be unleashed from judgment.

To write about something hard to explain, write a detailed letter to a friend about why it is so hard to explain, and then remove the initial “Dear Friend” part and you’ll have a great first draft.

To be interesting, just tell your story with uncommon honesty.

N.S.: Thanks! I will keep those in mind. You're somewhat of a role model for me, since you've managed to weave together surprisingly disparate interests -- technology, environmentalism, foreign cultures -- into a cohesive worldview, mainly through writing and editing, which is something I'd like to do as well. So anyway, let's talk a bit about that. One of your basic ideas is that technology itself makes up a natural system, which you call the technium. When did you first come up with this idea, and what made you think of it?

K.K.: First let me define what I mean by the technium. I call our human-made system of all technologies working together the technium. No technology can stand alone. It takes a saw to make a hammer and it takes a hammer to make a saw. And it takes both tools to make a computer, and in today’s factory it takes a computer to make saws and hammers. This co-dependency creates an ecosystem of highly interdependent technologies that support each other. The more advanced the technologies, the more intertwined, complex, and codependent they become. At this point in our evolution we need farmers to support indoor plumbing, plumbing to support banks, and banks to enable farmers, and round and round.

You might call this network of technologies “culture” because it is the sum of everything that humans make. But the technium is more than just the sum of everything that is made. It differs from culture in that it is a persistent system with agency. Like all systems, the technium has biases and tendencies toward action, in a way that the term “culture” does not suggest. The one thing we know about all systems is that they have emergent properties and unexpected dynamics that are not present in their parts. So too, this system of technologies (the technium) has internal leanings, urges, behaviors, attractors that bend it in certain directions, in a way that a single screwdriver does not. These systematic tendencies are not extensions of human tendencies; rather they are independent of humans, and native to the technium as a whole. Like any system, if you cycle through it repeatedly, it will statistically favor certain inherent patterns that are embedded in the whole system. The question I keep asking is: what are the tendencies in the system of technologies as a whole? What does the technium favor?

This idea kind of arrived from reading the critics of technology, such as Ted Kaczynski (the Unabomber), Lewis Mumford, and Langdon Winner. They argued that our human-made artifacts create a deep web of interdependencies which give the technosphere its own agency, and I found their arguments convincing. They see this system as growing increasingly strong, with great non-human agency, which I also agree with. But where I depart from the critics is that they are convinced that this network of technologies, this technium, is hostile to nature and, in particular, antithetical to us humans, its creators. In fact, in their view, the technium is so antagonistic, so powerful, and so far beyond our control that we need to dismantle it, or at least diminish it, or unplug it. In the eyes of the Unabomber and other anti-civilizationists, we need to destroy the technium before it destroys us.

On the other hand, I see this technium as an extension of the same self-organizing system responsible for the evolution of life on this planet. The technium is evolution accelerated. A lot of the same dynamics that propel evolution are also at work in the technium. At its core the technium is an ecosystem of inventions capable of evolving entirely new forms of being that wet biology alone cannot reach. Our technologies are ultimately not contrary to life, but are in fact an extension of life, enabling it to develop yet more options and possibilities at a faster rate. Increasing options and possibilities is also known as progress, so in the end, what the technium brings us humans is progress.

N.S.: You talk about the emergent properties of the technium. What are some of these emergent properties? Are we capable of confirming their existence with data and writing down simple, explicable rules that predict the evolution and/or the behavior of the technium itself?

K.K.: One unexpected emergent property of the technium is that most inventions and innovations are co-invented multiple times, simultaneously and independently. That is, more than one person will honestly invent the next new thing about the same time. This means that the popular image of the lone mad inventor or heroic scientist is just wrong. For instance 23 other inventors created electric incandescent light bulbs prior to Thomas Edison. Edison is renowned primarily because he was the first to figure out the business model of electric lighting. Simultaneous independent invention is the norm, true for minor as well as major leaps like calculus, steam engines and the transistor. Because each and every technology is not a single standalone idea but a web of many ideas, the technium itself emerges as a significant partner in invention. Libraries, journals, communication networks, and the accumulation of other technologies help create the next idea, beyond the efforts of a single individual. If Alexander Graham Bell had not secured the patent for inventing the telephone, Elisha Gray would have gotten it because they both applied for the telephone patent on the same day (Feb 14, 1876). There is plenty of data and confirmation about this emergent phenomenon, and we can predict with pretty good accuracy that lone inventors will become increasingly rare, and that invention and innovation will increasingly operate at a higher institutional level.

To be even more precise, quantitative, and rule-ish, we’d need to have more than a single example of the technium. Right now we have only one technium, and so we have an N=1 study, which yields few reliable rules. But in prehistory, when there was scarce communication between the Americas, Europe, Asia, Africa, and Australia, we had an N=5 case. The sequences of inventions on the separate continents were highly correlated, with 60 ancient technologies such as pottery, weaving, and dog domestication appearing in a similar order on each continent. We also see near-identical parallel inventions of tricky contraptions like slingshots and blowguns. However, because it was so ancient, we don’t have a lot of data for this behavior. What we would really like is an N=100 study of other technological civilizations in our galaxy. From that analysis we’d be able to measure, outline, and predict the development of technologies. That is a key reason to seek extraterrestrial life.

I think if we did have a robust set of techniums to inspect we’d find emergent phenomena like the rampant replication we see on this planet. At the core of the origin of life, and its ongoing billion-year metabolism, is its ability to replicate and copy information accurately. Life copies itself to live, copies to grow, copies to evolve. Life wants to copy. We could say the same about the technium, particularly the informational technium we are currently swimming in. Anything digital that can be copied will be copied. To perform any kind of communication, information must be replicated perfectly, again and again. Sending a message from one part of the globe to another requires innumerable copies to be made along the route. When information is processed in a computer, it is being ceaselessly replicated and re-copied while it computes. Information wants to be copied. Therefore, when certain people get upset about the ubiquitous copying happening in the technium, their misguided impulse is to stop the copies. They want to stamp out rampant copying in the name of “copy protection,” whether it be music, science journals, or art for AI training. But the emergent behavior of the technium is to copy promiscuously. To ban, outlaw, or impede the superconductivity of copies is to work against the grain of the system. It is a losing game. It’s like trying to work against the propensity of life to replicate. The “rule,” then, is to flow with the copies. The prediction would be that innovations, agents, companies, and laws that embrace the easy flow of copies will prevail, while the innovations, agents, companies, and laws that try to thwart liberated ubiquitous copies will ultimately not prevail. This is not the quantitative, precise kind of prediction we’d like to have, but this kind of general emergent trend is the best we’ll do with a sample size of N=1.

N.S.: So let's talk about some of the current and near-future effects of the technium on our world. There's currently a big debate about how technology interfaces with the environment. On one side we have degrowthers, who think the environment -- including the climate, but also natural habitats -- can only be preserved by curbing economic growth, and thus see the impact of human technology on the natural world as fundamentally extractive. On the other side are the technologists, who hold that only technological innovation gives us a realistic chance of reducing our environmental footprint and averting truly disastrous climate change. What's your perspective on this debate?

K.K.: There is no question I favor the latter perspective: while technology has gotten us into this mess (climate change), only technology can get us out of it. Only the technium (our technological system) is “big” enough to work at the global scale needed to fix this planetary-sized problem. Individual personal virtue (bicycling, using recycling bins) is not enough. However, the worry of some environmentalists is that technology can only contribute more to the problem and nothing to the solution. They believe that tech is incapable of being green because it is the source of relentless consumerism at the expense of diminishing nature, and that our technological civilization requires endless growth to keep the system going. I disagree.

In English there is a curious and unhelpful conflation of two meanings of the word “growth.” The most immediate meaning is to increase in size or girth, to gain weight, to add numbers, to get bigger. In short, growth means “more”: call it type 1 growth. More dollars, more people, more land, more stuff. More is fundamentally what biological, economic, and technological systems want to do: dandelions and parking lots tend to fill all available empty places. If that were all they did, we’d be right to worry. But there is another equally valid and common use of the word “growth” to mean develop, as in to mature, to ripen, to evolve. We talk about growing up, or our own personal growth. This kind of growth is not about added pounds, but about betterment. It is what we might call evolutionary or developmental, or type 2, growth. It’s about using the same ingredients in better ways. Over time evolution arranges the same number of atoms in more complex patterns to yield more complex organisms, for instance producing an agile lemur the same size and weight as a jellyfish. We seek the same shift in the technium. Standard economic growth aims to get consumers to drink more wine. Type 2 growth aims to get them to drink not more wine, but better wine.

The technium, like nature, excels at both meanings of growth. It can produce more, rapidly, and it can produce better, slowly. Individually, corporately, and socially, we’ve tended to favor functions that produce more. For instance, to measure (and thus increase) productivity we count up the number of refrigerators manufactured and sold each year. More is generally better. But this counting tends to overlook the fact that refrigerators have gotten better over time. In addition to making cold, they now dispense ice cubes, self-defrost, and use less energy. And they may cost less in real dollars. This betterment is real value, but it is not accounted for in the “more” column. Indeed a tremendous amount of the betterment in our lives that is brought about by new technology is difficult to measure, even though it feels evident. This “betterment surplus” is often slow moving, wrapped up with new problems, and usually appears in intangibles, such as increased options, safety, choices, new categories, and self-actualization — which like most intangibles, are very hard to pin down. The benefits only become obvious in retrospect, when we look back to realize what we have gained. Part of our growth as a civilization is moving from a system that favors more barrels of wine, to one that favors the same barrels of better wine.

A major characteristic of sapiens has been our compulsion to invent things, which we have been doing for tens of thousands of years. But for most of history our betterment levels were flatlined, without much evidence of type 2 growth. That changed about 300 years ago when we invented our greatest invention: the scientific method. Once we had hold of this meta-invention we accelerated evolution. We turned up our growth rate in every dimension, inventing more tools, more food, more surplus, more population, more minds, more ideas, more inventions, in a virtuous spiral. Betterment began to climb. For several hundred years, and especially for the last hundred years, we have experienced steady betterment. But that betterment — the type 2 growth — has coincided with massive expansion of “moreness.” We’ve exploded our human population by an order of magnitude, we’ve doubled our living space per person, and we have rooms full of stuff our ancestors did not. Our betterment, that is, our living standards, has increased alongside the expansion of the technium and our economy, and most importantly the expansion of our population. There is obviously some feedback loop in which increased living standards enable yearly population increases and more people create the technology for higher living standards, but causation is hard to parse. What we can say for sure is that as a species we don’t have much experience, if any, with increasing living standards and fewer people every year. We’ve only experienced increased living standards alongside increased population.

By their nature demographic changes unroll slowly because they run on generational time. Inspecting the demographic momentum today it is very clear human populations are headed for a reversal on the global scale by the next generation. After a peak population around 2070, the total human population on this planet will start to diminish each year. So far, nothing we have tried has reversed this decline locally. Individual countries can mask this global decline by stealing residents from each other via immigration, but the global total matters for our global economy. This means that it is imperative that we figure out how to shift more of our type 1 growth to type 2 growth, because we won’t be able to keep expanding the usual “more.” We will have to perfect a system that can keep improving and getting better with fewer customers each year, smaller markets and audiences, and fewer workers. That is a huge shift from the past few centuries where every year there has been more of everything.

In this respect “degrowthers” are correct in that there are limits to bulk growth — and running out of humans may be one of them. But they don’t seem to understand that evolutionary growth, which includes the expansion of intangibles such as freedom, wisdom, and complexity, doesn’t have similar limits. We can always figure out a way to improve things, even without using more stuff — especially without using more stuff! There is no limit to betterment. We can keep growing (type 2) indefinitely.

The related concern about the adverse impact of technology on nature is understandable but, I believe, can also be solved. The first phases of agriculture and industrialization did indeed steamroll forests and wreck ecosystems. Industry often required colossal structures of high-temperature, high-pressure operations that did not operate at human or biological scale. The work was done behind foot-thick safety walls and chain-link fences. But we have “grown.” We’ve learned the importance of the irreplaceable subsidy nature provides our civilizations and we have begun to invent more suitable technologies. Industrial-strength nuclear fission power will eventually give way to less toxic nuclear fusion power. The work of this digital age is more accommodating to biological conditions. As a symbolic example, the raw ingredients for our most valuable products, like chips, require ultra-cleanliness and copious volumes of air and water cleaner than we’d ever need ourselves. The tech is becoming more aligned with our biological scale. In a real sense, much of the commercial work done today is not done by machines that could kill us, but by machines we carry right next to our skin in our pockets. We continue to create new technologies that are more aligned with our biosphere. We know how to make things with fewer materials. We know how to run things with less energy. We’ve invented energy sources that reduce warming. So far we’ve not invented any technology that we could not successfully make greener.

We have a ways to go before we implement these at scale, economically, with consensus. And it is not at all inevitable that we will muster the political will to make these choices. But it is important to realize that the technium is not inherently contrary to nature; it is inherently derived from evolution and thus inherently capable of being compatible with nature. We can choose to create versions of the technium that are aligned with the natural world. Or not. As a radical optimist, I work towards a civilization full of life-affirming high technology, because I think this is possible, and imagining “what could be” gives us a much greater chance of making it real.

N.S.: I really like that vision a lot. You and I are quite closely aligned on our basic techno-optimism, our view of growth, and our concept of the relationship between human civilization and nature. But I'd like to try to challenge this optimism a little bit. Since around 2010, there have been increasing concerns about the direction the technium has taken us -- toward smartphones that absorb all our attention and take us out of the world and foster loneliness, toward social networks that sow sociopolitical discord and create feelings of personal inadequacy. Do you think innovation took something of a wrong turn in the 2010s, or are these problems overstated?

K.K.: These problems are overstated. The thing to remember when evaluating new technologies is that we always have to ask, “Compared to what?” Mercury-based dental fillings statistically caused some harm, but compared to what? Compared to cavities, they were a miracle. We tend to exempt existing technologies from the degree of scrutiny we give new technologies. Social media can transmit false information at great range and great speed. But compared to what? Social media's influence on elections from transmitting false information was far less than the influence of the existing media of cable news and talk radio, where false information was rampant. Did anyone seriously suggest we should regulate what cable news hosts or call-in radio listeners could say? Bullying middle schoolers on social media? Compared to what? Does it even register when compared to the bullying done in school hallways? Radicalization on YouTube? Compared to talk radio? To googling?

The complexity of social media is akin to biology. It is not a coincidence that we speak of things going viral. Figuring out what is going on with these new platforms, what is harmful, what is beneficial, is as challenging as determining what is best for our health. Human bodies have so many interacting variables, all difficult to isolate, that we can’t rely on a single study, or even a few, to determine our best health practices. Initial, honest, well-crafted medical studies are often proven wrong, sometimes embarrassingly wrong, many studies later. In fact it may take hundreds of studies before we can say a result is “true.” Social media is equally complex, with even more variables, and it is still an infant. We are trying to evaluate a baby that is roughly 250 months old, and hoping to predict what it will be good for when it grows up.

A further complication is that we are judging a class of technologies based on what kids do with them. Kids are inherently obsessive about new things, and can become deeply infatuated with stuff that they outgrow and abandon a few years later. So the fact they may be infatuated with social media right now should not in itself be alarming. Yes, we should indeed understand how it affects children and how to enhance its benefits, but it is dangerous to construct national policies for a technology based on the behavior of children using it.

Similarly, we should be wary of evaluating a technology within only one culture. So far, we are extremely biased because we have examined social media primarily in the US. There is little research on the effects — plus or minus — on users in other cultures. Since it is the same technology, inspecting how it is used in other parts of the world would help us isolate what is being caused by the technology and what is being caused by the peculiar culture of the US.

There are surely new problems generated by social media. We cannot use something for hours a day, every day, and have it not affect us. We have hints, but don’t really know. As we discover how it works, a wise society would modulate how this technology is used — by adults and children. As we begin to understand its tendencies, harms and benefits, we can devise incentives to continually redesign the tech to enhance democracy and well-being. All this must be done on the fly, in real time, because what we have learned over the past 100 years is that we can’t figure out, and can’t predict, what technologies will be good for simply by thinking and talking about them. New technologies are so complex they have to be used on the street in order to reveal their actual character. We are likely to guess wrong at first, as we have been wrong in the past when trying to guess what a new technology meant. We can laugh now at the moral panics over the degrading nature of novels, cinema, sports, music, dancing, TV, and comic books (the latter two prohibited in our house when I was growing up), but we know prohibitions never work in the long term. We should engage with social media, because we can only steer technologies while we engage them. Without engagement we don’t get to steer.

N.S.: When you say "we can only steer technologies while we engage them," who does the steering? Should government be regulating new technologies more heavily, and if so, how? It seems hard for users themselves -- ourselves! -- to steer these technologies. I've been a heavy Twitter user for years, but I've never managed to do much about its tendency toward misinformation and performative, attention-seeking aggression. No one else has either. How can we steer big platforms?

K.K.: There are 3 levels of steerage. Level 1: individually, we (you) ARE steering Twitter when you decide to mute or not to mute, or ban or not ban. You are voting for what you think is important by using it. Or some people vote by not using it. You don’t notice what difference you make because of the platform's humongous, billions-of-users scale. In aggregate, your choices make a difference in which direction it, or any technology, goes. People prefer to watch things on demand, so little by little, we have steered the technology to let us binge-watch. Streaming happened without much regulation or even enthusiasm from the media companies. Street usage is the fastest and most direct way to steer tech.

Level 2 is regulation by governments. This can work, and is often necessary to steer. The challenge is premature regulation. The panic cycle for tech begins with the first bit of news about possible harms to anyone, and the first response is a call to regulate. But as we just discussed, because the tech is a newborn, it is likely, if not certain, that our first impressions about it are wrong, and thus early regulations tend to brake more than steer. We have some good case examples of regulating tech in the right direction. We steered DDT away from being used as a plantation-scale pesticide (poisoning entire wildlife ecosystems), and redirected it to be used judiciously, carefully, in small amounts in villages to eliminate mosquito-borne malaria, saving the lives of many millions with minimal effect on ecosystems. That took years to accomplish, but the evidence was vivid. We should require more than precautionary evidence before using regulation to steer.

The third level of steerage is through innovation and entrepreneurship. When new problems are seen, new solutions are invented. Sometimes engineers in the host companies offer technical remedies, or shift directions. Often, solutions come from startups outside. Occasionally new directions are developed by the customers themselves. Vibrate mode on cell phones, instead of a cacophony of ringing bells, is one example of a marketplace technological solution. The marketplace needs regulation to keep it level, clean, optimal, and fertile for innovations to flourish. This is probably the more important role for regulation in steering.

It’s a messy process. And as messy as it has been to steer social media, it will be even messier to steer AIs and genetic engines, principally because they are so close to our identity as humans and because we are so ignorant of what humans are good for. Consensus on a preferred direction will be very slow in coming. And mandatory regulation should be slow as well.

N.S.: In fact, that's a good segue into the topic of AI, which of course everyone is talking about, given the recent success of chatbots and AI art programs. Where do you land on the spectrum of enthusiasm? Does this new technology change everything, or is it overhyped? What do you expect to change as a result of the new efflorescence of AI? Will the relationship between humanity and the technium fundamentally alter?

K.K.: Despite the relentless hype, I think AI overall is underhyped. The long-term effects of AI will affect our society to a greater degree than electricity and fire, but its full effects will take centuries to play out. That means that we’ll be arguing, discussing, and wrangling with the changes brought about by AI for the next 10 decades. Because AI operates so close to our own inner self and identity, we are headed into a century-long identity crisis.

This span is particularly notable because we have been discussing the effects of AI for 100 years already. In fact, never before have humans so thoroughly rehearsed something as far ahead as AI. Long before it arrives, we’ve been imagining its pros and cons, and trying to anticipate it for several generations. This serious rehearsal is an improvement in our culture, and a pattern we should continue for other technologies like genetic engineering, the metaverse, and so on. The upside to a long rehearsal is that upon arrival, we should not be too surprised. The downside to a long rehearsal is that there are more ways something can go wrong than right, and we’ve had time to think of all the horrible stuff, so that the positive conjectures feel mythical and unreal.

But now, here are chatbots, commercially available. However, in 30 years we will look back to 2023 and everyone then will agree that while something is happening with artificial smartness, we do not have anything like AI now. What we tend to call AI will not be considered AI years from now. One useful corollary of this is that from the perspective of looking back 30 years hence, there are no AI experts today. This is good news for anyone starting out right now, because you have as much chance as anyone else of making breakthroughs and becoming the reigning experts.

Nonetheless, right now machine learning is overhyped. It is not sentient, and not as smart as it seems. What we are discovering is that many of the cognitive tasks we have been doing as humans are dumber than they seem. Playing chess was more mechanical than we thought. Playing the game Go was more mechanical than we thought. Painting a picture and being creative was more mechanical than we thought. And even writing a paragraph with words turns out to be more mechanical than we thought. So far, out of the perhaps dozen cognitive modes operating in our minds, we have managed to synthesize two of them: perception and pattern matching. Everything we’ve seen so far in AI is because we can produce those two modes. We have not made any real progress in synthesizing symbolic logic and deductive reasoning and other modes of thinking. It is those “others” that are so important because as we inch along we are slowly realizing we still have NO IDEA how our own intelligences really work, or even what intelligence is. A major byproduct of AI is that it will tell us more about our minds than centuries of psychology and neuroscience have.

There are books full of lessons waiting to be said about AI as it is being born, so let me state just a few provocative points about what I expect:

• We should prepare ourselves for AIs, plural. There is no monolithic AI. Instead there will be thousands of species of AIs, each engineered to optimize different ways of thinking, doing different jobs (better than a general AI could do). Most of these AIs will be dumbsmarten: smart in many things and stupid in others. Expect frustration about how stupid they can be while being so smart.

• Theoretically any computer can emulate any other computer, but in practice it matters what substrate a computer runs on. No matter how fast or “smart” an AI may be, as long as it runs on silicon it will remain an alien. Its intelligence will be brilliant, but alien. Its humor will be sharp, but a little off. Its creativity will be intense, but a little otherworldly. The best framework for understanding complex AIs is to think of them as artificial aliens. Think of a robot Spock, super smart, but not quite human.

• Consciousness is a liability and not an asset in an AI. It is distracting and dangerous. We want our AIs to just drive, and not get anxious. Many expensive AIs will be advertised as “consciousness-free.”

• The relationship AIs will have with us will tend towards being partners, assistants, and pets, rather than gods. This first round of primitive AI agents like ChatGPT and DALL-E are best thought of as universal interns. It appears that the millions of people using them for the first time this year are using these AIs to do the kinds of things they would do if they had a personal intern: write a rough draft, suggest code, summarize the research, review the talk, brainstorm ideas, make a mood board, suggest a headline, and so on. As interns, their work has to be checked and reviewed. And then made better. It is already embarrassing to release the work of the AI interns as is. You can tell, and we’ll get better at telling. Since the generative AIs have been trained on the entirety of human work — most of it mediocre — they produce “wisdom of the crowd”-like results. They may hit the mark but only because they are average.

• Because AIs are being trained on average human work, they exhibit the biases, prejudices, weaknesses, and vices of the average human. But we are not going to accept that. Nope. We want the ethics and values of AIs to be better than ours! They have to be less racist, less sexist, less selfish than we are on average. It is NOT difficult to program ethical and moral guidelines into AIs — it is just more code. The challenge is that we humans have no consensus on what we mean by “better than us,” and exactly who “us” is. The problem is not the AIs. The problem is that the AIs have illuminated our own shallow and inconsistent ethics — even at our best. So making AIs better than us is a huge project.

• I am not aware of any person who has lost their job to an AI so far. There may be a few professions — like the person paid to transcribe a talk into text — that will go away, but most jobs will shift their tasks to accommodate the emerging power of AI. Most of the worry about AI unemployment so far is third-person worry — people imagine some other person getting fired, not themselves.

• Different AIs will have different personalities. We already see this with image generators; some artists prefer working with one rather than another. It takes an extremely close intimacy to get your intern AI to help you produce great work. Some people are 10x and 100x better than others with these tools. They have become AI whisperers. Other people are repelled by this alienness and want nothing to do with it. That is fine. But 90% of AIs will never be encountered by anyone. That is because the bulk of AIs will run hidden in back offices. They will reside in the walls, so to speak, like plumbing and electrical wires: vital but out of mind. This invisibility is the mark of the most successful technologies — to be ubiquitous but not seen.

Before this becomes a book, I’ll stop there.

N.S.: I share your optimism about AI. But let's briefly talk about the times when futurism fails. Two years ago, a lot of people in the tech world were talking breathlessly about (and throwing very large amounts of money at) "web3", a catch-all name for crypto stuff. We heard wide-eyed tales about how crypto would usher in an era of permissionless commerce, a new ownership society, financial independence, a new efflorescence of online creativity. Instead, essentially everything created in that crypto boom turned out to be either a Ponzi scheme, a pump-and-dump scam, or simply wildly overoptimistic. To cite a less dramatic example, the gig economy was supposed to revolutionize human labor and income and land use, but its impact, while real, has been much more modest than people expected. Is there any systematic reason these technological visions fell short? Is it possible to tell in advance what new limbs our technium will see fit to graft onto its body? Or is it just a matter of taking a lot of shots on goal and seeing what works?

K.K.: The baseball oracle once said: predictions are hard to make, especially about the future. I think it is hard enough to predict the present. If we could predict the present, we’d be half done. Most of my work is trying to see what is actually happening right now.

Futurism fails all the time, but I actually think we are getting better at it. For one, we tend to overestimate change in the short term, and underestimate it over the longer term, and I see evidence of us beginning to learn that lesson and shift our expectations. Two, we’ve learned to expect that even nice technologies bite back. Now from the get-go we assume there will be significant costs and harms of anything new, which was not the norm in my parents' generation. Scenario planning, once esoteric, is now standard corporate planning procedure. Scenarios are less about predicting exactly what will happen and more about imagining the range of possible futures so that you are not surprised when one of them happens, and you can use the scenarios to generate contingency plans — what would we do if the world headed in this direction? This is a giant step forward in managing the future.

This does not prevent a future fail like what happened with crypto. The astronomical volume of money and greed flowing through this frontier overwhelmed and disguised whatever value it may have had. If you prohibited the mention of “money”, “making money” or “saving money” from any discussion of crypto, it was always a very short conversation. I suspect there are some powerful ideas and tech possible using blockchain, but these value propositions are not going to show up until crypto is seen as an expense instead of a way of making money. It has to be valuable *while* it loses money, and that has not happened yet.

I think the fail of crypto is less a failure of futurism than a flop in culture in general. When I first experienced virtual reality in 1989, I felt sure the world would change in the next 5 years. It’s been 30 years now and the state of VR is about the same. I was part of a small group of techno-enthusiasts who thought believable VR was imminent, and got it wrong. What’s been different about crypto is that the main boosters have not been a small group of techno-enthusiasts. Rather crypto has been promoted sky high by athletes, celebrities, shoe companies, day traders, politicians, and hustlers. Half of the usual technology evangelists, like myself, have been silent on crypto, or openly skeptical of it. For every tech promoter of crypto there’s been a very educated tech criticism of it. And I don’t mean the usual tech criticism of “this is bad,” I mean the tech criticism of “this does not work.” (I would cautiously add the word “yet”.) So to half of the tech and futurist community, it is only a half-fail. And taking to heart lesson #1 above, crypto still has potential to be revolutionary in the long run. The sweet elegance of blockchain enables decentralization, which is a perpetually powerful force. This tech just has to be matched up to the tasks — currently not visible — where it is worth paying the huge cost that decentralization entails. That is a big ask, but taking the long view, this moment may not be a failure. I would say the same about the gig economy — let’s give it more time to judge; it’s barely been around 5,000 days.

N.S.: I usually close these interviews by asking what someone is working on right now. In your case, I feel like I'll just read whatever it is when it comes out! So today I'll switch it up a bit. What do you think young people should be working on right now? What's exciting, new, and important in the world of 2023?

K.K.: My generic career advice for young people is that if at all possible, you should aim to work on something that no one has a word for. Spend your energies where we don’t have a name for what you are doing, where it takes a while to explain to your mother what it is you do. When you are ahead of language, that means you are in a spot where it is more likely you are working on things that only you can do. It also means you won’t have much competition.

Possible occupations that are ahead of our language would be person-that-sits-with-you-when-you-are-ill, story-teller-for-the-company, AI-whisperer, media-fact-check-verifier, wireless-troubleshooter, eugenic-adviser-diviner, roaming-robot-repair-person, influence-matchmaker, polyandry-therapist, applied-historian-in-residence, and maybe, full-time-note-taker.

My second bit of counsel is anti-career advice (taken from my new book Excellent Advice) and it goes like this:

Your 20s are the perfect time to do a few things that are unusual, weird, bold, risky, unexplainable, crazy, unprofitable, and look nothing like “success.” The less this time looks like success, the better it will be as a foundation. For the rest of your life these orthogonal experiences will serve as your muse and touchstone, upon which you can build an uncommon life.
