A virtuous cycle of worker power and technology?

What if higher wages drive faster productivity growth?

The other day I ordered at a restaurant on my smartphone. No waiter came by to ask me if I was ready to order. I scanned a QR code on a piece of paper taped to a wooden post; this brought up the menu on my phone, and I simply indicated what I wanted. As if by magic, a server appeared a few minutes later with the food. During my meal, no one wandered by to ask me if I “was still working on that”; when I wanted more food, I just used my phone again. I’m sure I’m one of many millions of Americans who are learning to order food this way, as a result of the understaffing and social distancing rules imposed by the Covid pandemic.

While I was ordering this way, I kept thinking over and over that this shift is a real game-changer in terms of productivity. Let people order food on their phones, and the number of wait staff you need to deliver the same service goes way down. It’s barely more onerous for the customer if at all, and it eliminates the need to have human beings constantly sashaying around the establishment, eyeing how much diners have eaten.

So I guess I wasn’t too surprised when I saw, via Erik Brynjolfsson and Georgios Petropoulos, that labor productivity is growing at rates not seen since the previous century:

What IS surprising is that this growth accelerated in the first quarter of 2021, as Americans started coming back to work en masse. If productivity gains had been purely a function of service establishments being forced to stretch and do more with fewer workers because of Covid, we might have expected to see a reversal as those workers returned; instead, productivity is growing even faster.

Now, it’s important not to put too much weight on one quarter’s worth of data. This is a noisy time series with plenty of mismeasurement, and there are bound to be revisions (in fact, looking at the non-seasonally-adjusted numbers shows a slightly more modest increase in Q1). But coupled with my observations on the ground, the change seems real; we can see the actual labor-saving technologies being implemented right in front of our eyes. Nor am I the only one who sees this. Writing in the WSJ, Greg Ip reports:

Industries accounting for a third of the job loss since the start of the pandemic have increased output, including retailing, information, finance, construction, and professional and business services, said Jason Thomas, head of global research at private-equity manager Carlyle Group.

“This recession took on a life of its own by leading to greater remote work, greater reliance on technology,” Mr. Thomas said. Executives began to ask “hard questions: Why do we have so much floor space? Are we sure our cost base makes so much sense? Why were we taking so many intra-office trips? This experience has just revealed how ignorant you were about the frontiers of technology that you could exploit.”

So if employers really are investing in labor-saving technology, the question is: Why? Part of it is surely that the pandemic simply nudged managers to reconsider how they do things; inertia in the business world is strong. But another possible explanation is that workers are becoming more powerful and demanding higher wages. In the NYT, Neil Irwin notes some evidence that workers are gaining the upper hand in the labor market:

The “reservation wage,” as economists call the minimum compensation workers would require, was 19% higher for those without a college degree in March than in November 2019, a jump of nearly $10,000 a year, according to a survey by the Federal Reserve Bank of New York…

A survey of human resources executives from large companies conducted in April by The Conference Board, a research group, found that 49% of organizations with a mostly blue-collar workforce found it hard to retain workers, up from 30% before the pandemic.

With workers demanding higher wages, there could be an incentive for companies to invest in technologies that will economize on labor — like QR code ordering at restaurants.

One problem for this narrative is that although wages are rising in dollar terms, prices are also accelerating, meaning that real wages actually fell in May. But if employers expect inflation to be temporary and employees’ wage demands to be long-lasting, they will probably feel the pressure to invest in automation.

To many, businesses replacing human workers with robots in order to avoid paying higher wages will sound like a very negative thing. But in fact, there’s a theory that this is how economies grow — that high wages spur technological progress that pushes up productivity, which then justifies even higher wages, creating a virtuous cycle. In fact, some people think this is how developed nations got rich in the first place.

The Allen-Romer theory of technology improvement

Why did the Industrial Revolution begin in Britain instead of in China, which had a similar level of technological sophistication? Or Germany, for that matter? Economic historian Robert Allen had a theory. Wages in Britain, he wrote, were significantly higher than wages elsewhere, due to the country’s extensive foreign trade (which, yes, was partly due to colonialism). Higher wages meant that businesses — mines, ironworks, and so on — needed to find a way to bring costs down. So they invested in labor-saving technologies, like James Watt’s famous steam engine, that took advantage of cheap and plentiful local coal deposits. Those investments ignited a technological revolution that eventually created the modern industrialized world.

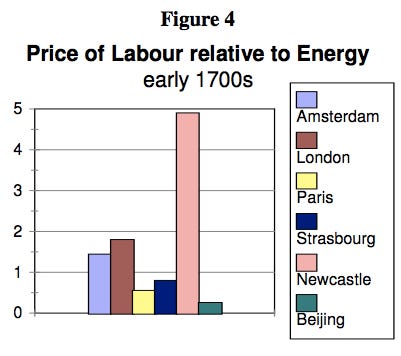

Meanwhile, Allen asserts, wages in China were much lower — China had machinery that was advanced for its time, but businesses found it more profitable to simply hire low-paid workers instead. Here, via Brad DeLong, is a chart showing how Britain’s economy was biased toward coal and China’s was biased toward cheap labor:

Given those prices, investing in technology would have been a no-brainer for the people in North England.

Now, this theory is far from settled science. There are plenty of smart people who critique Allen’s theory, including his wage data (do we really know what people were paid back in the 1700s?). But it’s certainly an important theory that can’t be dismissed. And the idea that expensive labor spurs innovation has been the basis for various other theories of growth over the years, including a 1987 theory by Paul Romer:

I also assumed that an increase in the total supply of labor causes negative spillover effects because it reduces the incentives for firms to discover and implement labor-saving innovations that also have positive spillover effects on production throughout the economy.

Note the term “spillover effects”. This idea is really the key. Basically, suppose you’re a business, and labor suddenly becomes really expensive, so you decide to install an automated ordering system for the customers. Then you think “Hmm, wait a second, could I also use an automated system for the wait staff, hosts, and chefs to communicate instead of having to walk over and talk to each other?” So you go around looking for more communication tools to buy. Software companies realize this is a big market, and start making more and better restaurant productivity tools. Along the way they develop cool new methods of remote communication that other software companies adapt for completely different markets, like factories or big-box retailers. Innovation leads to more innovation. That generates productivity gains, which in turn help to boost wages in the long run.

High wages lead to even higher wages — a virtuous cycle indeed.

But if theories like Allen’s and Romer’s are right, that virtuous cycle needed something other than innovation in order to force up wages in the first place. That something else is worker power.

What increases worker power?

There are several things that can increase workers’ ability to bargain for higher wages. Robert Allen thought that Britain’s success at international trade had raised wages in North England. But there are many other possible things to provide that initial wage boost. For example:

1) Full employment. When aggregate demand is high, labor markets get tight, and wages go up. If this causes a burst of innovation, it would validate the idea that high levels of aggregate demand lead to faster productivity growth, rather than the other way around — an idea sometimes known as “Verdoorn’s Law”. So fiscal and monetary policies to keep the economy humming along at full employment could ultimately accelerate the economy’s long-term growth trend.

2) Unions. Unions don’t always push wages up — Germany’s industrial unions often do the opposite, in fact, holding down wages to help German companies maintain their international competitiveness. But throughout America’s history, unions have primarily acted to lower economic inequality by bargaining for higher wages. If Allen’s theory is right, this might have helped spur productivity gains in the long term — especially the IT revolution that got started in the late 70s and 80s.

3) Minimum wage laws. The late 2010s were a very good time for low-wage workers in America, who saw considerable raises. Part of that was doubtless due to the sheer length of the expansion that dug us out of the hole of the Great Recession; at the end of long expansions, wages always tend to rise. But part was due to a wave of minimum wage increases across the country, many of them motivated by the “Fight for $15” campaign. Minimum wage opponents often warn that setting higher minimum wages will cause companies to replace humans with technology, but if the spillover effects theorized by Allen, Romer, etc. are true, then this “threat” is actually something we want to happen!

4) Outside options. Special pandemic unemployment benefits — currently $300/week until the beginning of September in states that are still participating — provide a powerful outside option for workers. The pandemic isn’t quite over, so why risk going back to work quite yet, especially when the government will support you to some degree? To business-minded conservatives, the idea that the government is paying people not to work evokes horror. But if this is driving up (nominal) wages and causing businesses to invest in labor-saving technology, is three more months of a good outside option such a bad thing?

So there are lots of things that increase worker power relative to employers. If we believe that high wages spur investment in technology that eventually leads to productivity growth and even higher wages, then we shouldn’t worry as much about things like fiscal and monetary stimulus, unions, minimum wage laws, and special pandemic relief programs.

What about jobs?

But now we come to the question that’s on lots of people’s minds: Isn’t automation bad for workers? For years now, we’ve been bombarded with the narrative that humankind is on the verge of being rendered obsolete by robots, A.I., and other forms of automation. Basic income proponents sometimes tell this story as a way to motivate us to spread the wealth. Minimum wage opponents try to scare us with this story, convincing us that only by lowering their pay and living standards can workers at the bottom of the pyramid hope to stay ahead of the looming robot takeover for a few more years. Always, the refrain is the same: Labor-saving technology is a threat to labor.

So far, no robot apocalypse has materialized; most people still have jobs. Among prime-age people, the employment rate on the eve of Covid was as high as in the mid-2000s boom, and close to the record set in the late 1990s:

Nevertheless, stories of investments in labor-saving technology are going to make many people very worried. What will the servers do when diners can just push a button? And so on.

These worries are very hard for supporters of technological innovation and progress to answer, because we don’t always know exactly what people will end up doing. We have a few ideas: the care economy, warehouses, going back to school and getting higher-skilled jobs, etc. But lots of people who stop being waiters in restaurants will end up doing things that are hard for us to predict.

The thing is, humankind has had many waves of technological innovation before, and somehow there is still plenty of work for everyone to do. Some craftspeople did suffer economically from the introduction of industrial technology in Britain, but living standards rose at an accelerating pace; pretty much no British person today would go back to the world of 1700 just so they could get a job as a weaver.

I think Americans’ terror of automation is another example of the scarcity mindset into which we’ve fallen in recent decades. Like timid mice guarding our last crumb of cheese, we look to any change as a source of dread rather than a source of opportunity.

But we don’t have to be like that. Believing in technological progress is about believing in the potential of humankind. Do we really think that QR code ordering in restaurants will be the innovation that finally renders humans obsolete? Do we really think that wandering around a restaurant saying “Are you still working on that?” was the last thing that the average human being was good for, and after this we’ll never be able to find something new for people to do?

I don’t believe that. I believe we’re only at the beginning of our quest to unlock average people’s true economic potential.

Morning Noah,

Observations and comments

I am getting more recruiters than ever trying to poach me. (Skilled blue collar... college not required). Just had one hit me up with $45 per hour with guaranteed OT and generous Per Diem as an opening bid.

My company in general is always looking for skilled technicians. Pays well, still always looking.

My daughter is going to school for mechatronics. She will be right hand of our robot overlords.

Brother in law owns fast food restaurant. Business booming. Struggling to find workers. Average wage has gone up $3 in last 6 months. He is investing in tech to reduce labor as well. Digital terminals, headsets, etc... quite high capital costs, but allows him to reduce workers per shift.

Have a great day.

My ex is Chinese. In 2005 we spent a month there and I noticed that they used an amazing amount of labor. At restaurants there would be three hosts to greet you and at least two waiters at the table. At department stores there was one person to take your money and another to hand write a receipt. Workers on retail floors had a lot of latitude to negotiate price.

I got a haircut at an upscale salon in a fashionable area and *five* people worked on my (very short and simple) hair. There was one person to do a dry wash, another to do a wet wash, another to dry my hair, another to do the actual cutting, and a final one for me to pay.

Total cost? $6

I remember asking someone about why there wasn’t more technology at the point of sale and he said it was because they had so many people that it was cheaper just to hire another body.

Of course, that was almost 16 years ago and I imagine there’s a lot more technology in use these days, but maybe not?